Confusion of the Inverse

Where is it safer to drive a car: closer to home or far away?

Take this statistic: 77.1 per cent of accidents happen within 10 miles of drivers’ homes. A quick Google search on the topic turns up several explanations for this observation, ranging from overconfidence to distraction. So, you conclude that driving closer to home is dangerous.

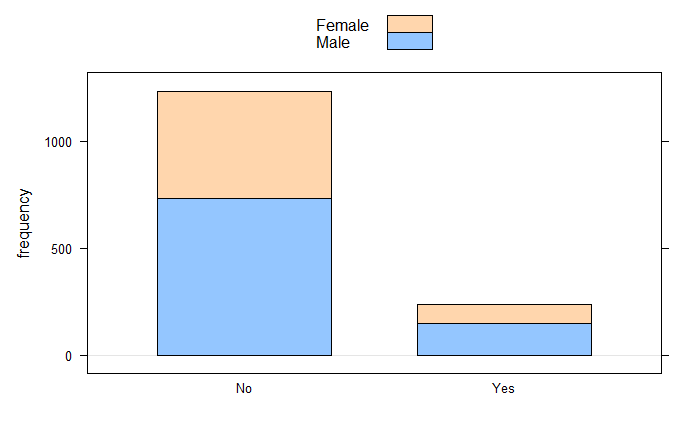

However, this statistic is useless if you are looking for a safe place to drive. What you wanted was the probability of an accident given that you are near or far from home, say P(accident|closer to home). What you got instead was the probability that you are closer to home given that you have had an accident, P(closer to home|accident). Consider the following two scenarios. Note that P(closer to home|accident) = 77/100 = 77% in both cases.

Scenario 1: More people drive closer to home

| | Home | Away | Total |
|---|---|---|---|
| Accident | 77 | 23 | 100 |
| No Accident | 1000 | 200 | 1200 |

Here, out of the 1300 people, 1077 drive closer to home.

P(accident|closer to home) = 77/1077 = 0.07

P(accident|far from home) = 23/223 = 0.10

Home is safer.

Scenario 2: More people drive far from home

| | Home | Away | Total |
|---|---|---|---|
| Accident | 77 | 23 | 100 |
| No Accident | 1000 | 500 | 1500 |

Here, out of the 1600 people, 1077 drive closer to home.

P(accident|closer to home) = 77/1077 = 0.07

P(accident|far from home) = 23/523 = 0.04

Home is worse.
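The arithmetic in the two scenarios can be checked with a short script. This is only a sketch: the function name `conditional_probabilities` and its argument names are made up for illustration, and the counts are the hypothetical ones from the two tables above, not real accident data.

```python
def conditional_probabilities(accident_home, accident_away, safe_home, safe_away):
    """Compute the three conditional probabilities for one 2x2 table of driver counts."""
    total_accidents = accident_home + accident_away
    return {
        # What the headline statistic reports
        "P(home|accident)": accident_home / total_accidents,
        # What a driver looking for a safe place actually wants
        "P(accident|home)": accident_home / (accident_home + safe_home),
        "P(accident|away)": accident_away / (accident_away + safe_away),
    }


# Hypothetical counts taken from the two scenario tables
scenario_1 = conditional_probabilities(77, 23, 1000, 200)
scenario_2 = conditional_probabilities(77, 23, 1000, 500)

for name, result in [("Scenario 1", scenario_1), ("Scenario 2", scenario_2)]:
    print(name)
    for label, value in result.items():
        print(f"  {label} = {value:.2f}")
```

In both scenarios P(home|accident) comes out the same, while the comparison between P(accident|home) and P(accident|away) flips. The only input that changed is the number of accident-free drivers far from home, which the headline statistic never looks at.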

This is known as the confusion of the inverse, a common misinterpretation of conditional probability. The statistic counts only the people who had been in accidents and says nothing about how many people drove in each place without one. Not convinced? What would you conclude from the following? A few tens of millions of people have died in motor accidents in the last 50 years, while only 19 people have died during space travel. Does that make travelling in space safer than travelling on Earth?
