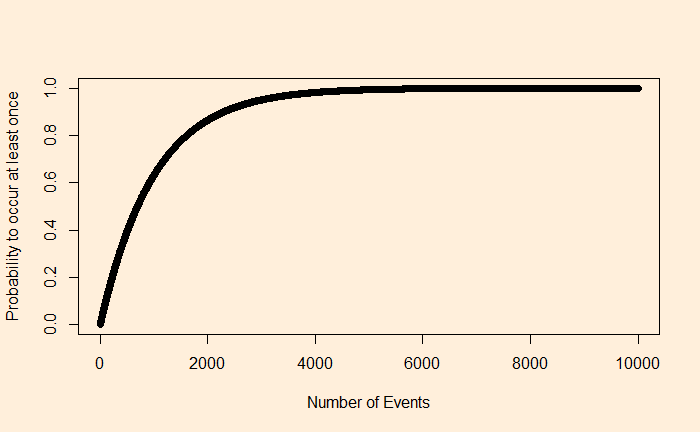

Persuasion is an attempt by one person (the sender) to convince another (the receiver) to decide in the sender's favour. Suppose the receiver is a judge and the sender is a prosecutor. The prosecutor wants the judge to convict every defendant, but the judge knows that only a third of defendants are guilty. Can the prosecutor persuade the judge to rule in her favour in more than a third of cases? Assume the judge is rational: she updates her beliefs with Bayes' rule and convicts only when she believes the defendant is more likely guilty than innocent, that is, when the probability of guilt exceeds 50%. What strategy should the prosecutor follow?

Suppose the prosecutor investigates each case and learns the truth. She commits in advance to a rule for reporting her findings to the judge, and the judge knows that rule. Consider the following three strategies.

Strategy 1: Always guilty

The prosecutor reports that the defendant is guilty 100% of the time, whatever the investigation finds. Such a report carries no information, so the judge ignores it and falls back on the prior probability that a defendant is guilty, 1/3 (about 33%). Because this is below 50%, she always acquits. The prosecutor's payoff (her share of convictions) is 0.
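A minimal Python sketch of why Strategy 1 fails, assuming the judge convicts only when the probability of guilt exceeds 50%. The function name is hypothetical. Because a "guilty" report is sent for guilty and innocent defendants alike, P(G-R|g) = P(G-R|i) = 1 and Bayes' rule returns the prior unchanged.

```python
from fractions import Fraction

def posterior_after_uninformative_report(prior_guilty):
    """Bayes' rule when a 'guilty' report is sent for everyone.

    P(G-R|g) = P(G-R|i) = 1, so the report cancels out and the
    posterior equals the prior.
    """
    p_gr_g, p_gr_i = Fraction(1), Fraction(1)
    numerator = p_gr_g * prior_guilty
    return numerator / (numerator + p_gr_i * (1 - prior_guilty))

post = posterior_after_uninformative_report(Fraction(1, 3))
convict = post > Fraction(1, 2)  # assumed decision rule: convict only above 50%
print(post, convict)  # 1/3 False
```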

Strategy 2: Full information

The prosecutor keeps it simple and reports exactly what the investigation finds. Her reports are then fully credible, so the judge follows them: she convicts the 1/3 of defendants who are guilty and acquits the other 2/3. The prosecutor's payoff is 1/3 (about 0.33).
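As a rough check, the sketch below simulates Strategy 2 with a fixed random seed, assuming each defendant is independently guilty with probability 1/3. The function name and sample size are illustrative choices, not part of the original argument. The printed share should land close to 1/3.

```python
import random

def simulate_full_information(n=100_000, prior=1 / 3, seed=0):
    """Monte Carlo share of convictions when the prosecutor reports the truth."""
    rng = random.Random(seed)
    convictions = 0
    for _ in range(n):
        guilty = rng.random() < prior  # draw a defendant
        report = "guilty" if guilty else "innocent"  # truthful report
        # A truthful "guilty" report makes P(g | report) = 1 > 1/2, so the judge
        # convicts; a truthful "innocent" report makes it 0, so she acquits.
        if report == "guilty":
            convictions += 1
    return convictions / n

rate = simulate_full_information()
print(f"{rate:.3f}")
```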

Strategy 3: Noisy information

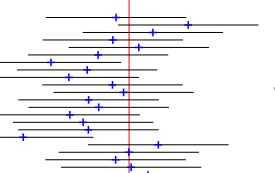

Here, the prosecutor always reports "guilty" when the investigation shows the defendant is guilty. When it shows the defendant is innocent, she reports "guilty" slightly less than 50% of the time and "innocent" the rest of the time. Let these fractions be 3/7 for "guilty" and 4/7 for "innocent".
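The reporting rule of Strategy 3 can be written as a small sampling function. This is a sketch: the name `noisy_report` and the constant below are hypothetical, and the fraction 3/7 is taken from the text. Among innocent defendants, the empirical share of "guilty" reports should come out near 3/7 (about 0.429).

```python
import random

P_GUILTY_REPORT_IF_INNOCENT = 3 / 7  # fraction taken from the text

def noisy_report(guilty, rng):
    """Strategy 3: always 'guilty' for the guilty; for the innocent,
    'guilty' with probability 3/7 and 'innocent' otherwise."""
    if guilty:
        return "guilty"
    return "guilty" if rng.random() < P_GUILTY_REPORT_IF_INNOCENT else "innocent"

rng = random.Random(42)
reports = [noisy_report(False, rng) for _ in range(70_000)]
share = reports.count("guilty") / len(reports)
print(f"{share:.3f}")
```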

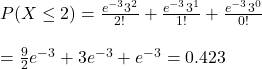

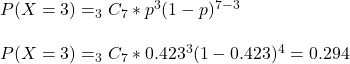

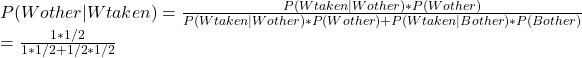

From the judge's perspective, an "innocent" report can only come from an innocent defendant, so when she sees one she acquits. This happens (2/3) × (4/7) = 8/21 of the time, or about 38%. Remember, 2/3 of the defendants are innocent! If she sees a "guilty" report, on the other hand, she applies Bayes' rule. Writing g and i for a guilty or innocent defendant and G-R for a guilty report, the probability that the defendant is guilty given a guilty report, P(g|G-R), is

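$$
\begin{aligned}
P(g \mid G\text{-}R) &= \frac{P(G\text{-}R \mid g)\,P(g)}{P(G\text{-}R \mid g)\,P(g) + P(G\text{-}R \mid i)\,P(i)} \\
&= \frac{1 \times \frac{1}{3}}{1 \times \frac{1}{3} + \frac{3}{7} \times \frac{2}{3}} \\
&= \frac{1/3}{13/21} = \frac{7}{13} \approx 0.54
\end{aligned}
$$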
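The same Bayes' rule computation can be reproduced with exact fractions. A minimal sketch; the function name is hypothetical and the inputs are the values from the formula: P(G-R|g) = 1, P(G-R|i) = 3/7, P(g) = 1/3.

```python
from fractions import Fraction

def posterior_guilty_given_gr(p_gr_g, p_gr_i, p_g):
    """P(g|G-R) = P(G-R|g) P(g) / [P(G-R|g) P(g) + P(G-R|i) P(i)]."""
    p_i = 1 - p_g
    numerator = p_gr_g * p_g
    return numerator / (numerator + p_gr_i * p_i)

post = posterior_guilty_given_gr(Fraction(1), Fraction(3, 7), Fraction(1, 3))
print(post, round(float(post), 2))  # 7/13 0.54
```

Using `Fraction` rather than floats keeps the result exact, so it can be compared directly against the 50% threshold.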

Since 0.54 is above 50%, the judge convicts whenever she sees a guilty report. The overall conviction rate is therefore 1 − 8/21 = 13/21, or about 62%, and the prosecutor's payoff is about 0.62.
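An end-to-end simulation of Strategy 3 is a useful cross-check on the arithmetic. The sketch below assumes independent defendants, each guilty with probability 1/3, and the 50% conviction rule; the names and seed are illustrative. The judge's posterior for each report is computed once with Bayes' rule, and the simulated conviction rate should come out close to 13/21 (about 0.619).

```python
import random
from fractions import Fraction

PRIOR = 1 / 3
Q = 3 / 7  # P("guilty" report | innocent), from the text

# Judge's posterior P(g | report), computed once with Bayes' rule.
POSTERIOR = {
    "guilty": float(Fraction(1, 3) / (Fraction(1, 3) + Fraction(3, 7) * Fraction(2, 3))),  # 7/13
    "innocent": 0.0,  # only innocent defendants ever get an "innocent" report
}

def conviction_rate(n=100_000, seed=1):
    rng = random.Random(seed)
    convictions = 0
    for _ in range(n):
        guilty = rng.random() < PRIOR
        # Guilty defendants always get "guilty"; innocent ones get it with probability Q.
        report = "guilty" if guilty or rng.random() < Q else "innocent"
        if POSTERIOR[report] > 0.5:  # assumed rule: convict only above 50%
            convictions += 1
    return convictions / n

rate = conviction_rate()
print(f"{rate:.3f}")
```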

Conclusion

Persuasion, then, is the sender's use of her information advantage to influence the receiver's decision. By deliberately adding noise to the information she passes to the judge, the sender can raise the share of decisions in her favour, in this case from 33% to about 62%.
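The three strategies can be compared side by side with one exact function. This is a sketch under the same assumptions (prior 1/3, judge convicts only when P(g | report) > 1/2); `conviction_rate` is a hypothetical name. Each strategy is described by the pair P(G-R|g), P(G-R|i), and the function adds up the probability of every report that leads to a conviction.

```python
from fractions import Fraction

PRIOR = Fraction(1, 3)  # P(g)

def conviction_rate(p_gr_g, p_gr_i, prior=PRIOR):
    """Exact share of convictions for a reporting rule.

    p_gr_g = P(G-R|g) and p_gr_i = P(G-R|i). For each possible report
    (guilty, then innocent), the judge updates by Bayes' rule and, by
    assumption, convicts only when P(g | report) > 1/2.
    """
    rate = Fraction(0)
    for lik_g, lik_i in ((p_gr_g, p_gr_i), (1 - p_gr_g, 1 - p_gr_i)):
        p_report = lik_g * prior + lik_i * (1 - prior)
        if p_report == 0:
            continue  # this report is never sent
        if lik_g * prior / p_report > Fraction(1, 2):
            rate += p_report
    return rate

strategies = {
    "always guilty": (Fraction(1), Fraction(1)),
    "full information": (Fraction(1), Fraction(0)),
    "noisy information": (Fraction(1), Fraction(3, 7)),
}
for name, rule in strategies.items():
    r = conviction_rate(*rule)
    print(f"{name}: {r} ≈ {float(r):.2f}")
# always guilty: 0 ≈ 0.00
# full information: 1/3 ≈ 0.33
# noisy information: 13/21 ≈ 0.62
```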

Emir Kamenica and Matthew Gentzkow, "Bayesian Persuasion," American Economic Review 101 (October 2011): 2590–2615.
