A Pair of Aces

Here is a simple probability problem that can still baffle many people. I have two well-shuffled decks of cards on the table. Take the top card from each deck. What is the probability that at least one of the two cards is an ace of spades?

The probability of finding the ace of spades on top of the first deck is 1/52, and the same holds for the second deck. It is tempting to add the two probabilities and get 2/52 = 1/26. Right? Well, that answer is not correct! Adding works only for mutually exclusive events, and here both top cards can be the ace of spades at the same time, so the sum counts that case twice. So what about (1/52)x(1/52) = 0.00037? That is something else: the probability that both top cards are aces of spades. But we are interested in at least one.

You obtain the correct answer as follows:

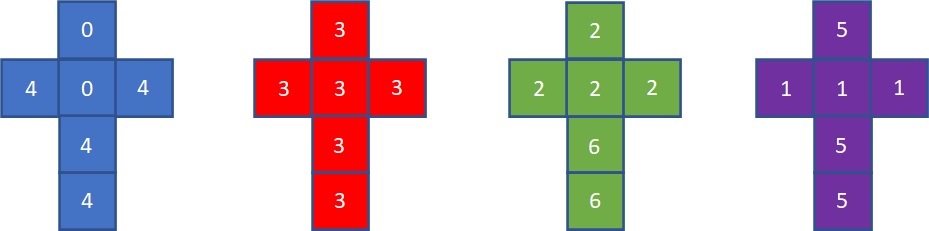

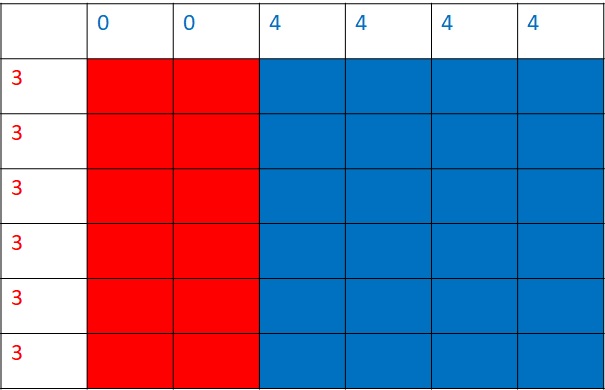

The probability of finding no ace of spades on top of the first deck is 51/52, and the chance is the same for the second deck. Because the two decks are independent, the probability that neither top card is the ace of spades is (51/52)x(51/52). The probability of obtaining at least one equals 1 – the probability of getting none. So, it is 1 – (51/52)x(51/52) = (52 x 52 – 51 x 51)/(52 x 52) = 103/2704 = 0.0381.
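The arithmetic above can be checked with exact fractions. This is a minimal sketch using Python's standard `fractions` module; the variable names are illustrative and not part of the original problem.

```python
from fractions import Fraction

# Chance that the top card of one well-shuffled deck is the ace of spades.
p_ace = Fraction(1, 52)

# The tempting but wrong answer: simply adding the two probabilities.
p_naive_sum = p_ace + p_ace

# Probability that BOTH top cards are the ace of spades.
p_both = p_ace * p_ace

# Complement rule: 1 minus the probability that neither top card is the ace.
p_none = (1 - p_ace) ** 2
p_at_least_one = 1 - p_none

print("naive sum:   ", p_naive_sum)
print("both aces:   ", p_both)
print("at least one:", p_at_least_one, "=", round(float(p_at_least_one), 4))
```

Note that the correct answer equals the naive sum minus the probability of both, which is the double-counted case.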

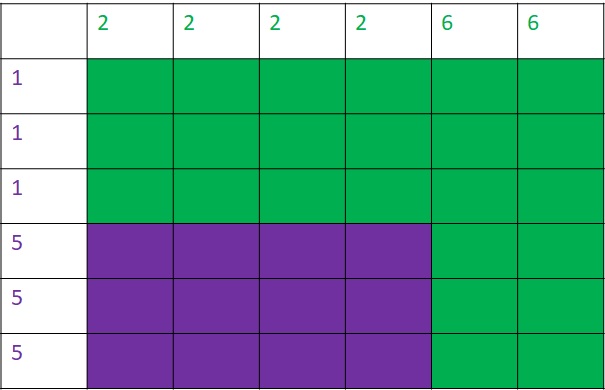

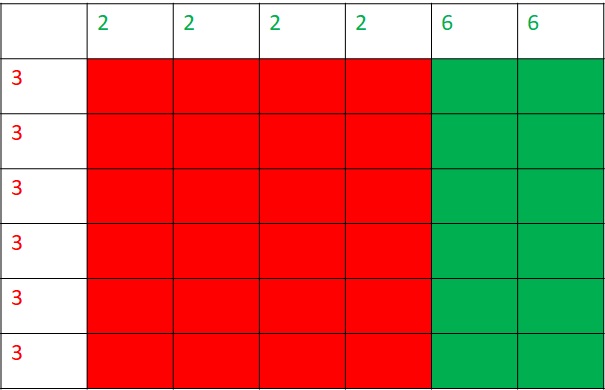

Another way to understand this probability is to count all the pairs of cards, one from each deck, that contain at least one ace of spades, and then divide that count by the total number of pairs. If the ace of spades comes from the first deck, it can be paired with any of the 52 cards from the second, and vice versa. So there are 52 + 52 = 104 combinations. That count includes the pair of two aces of spades twice, so you remove one of them, reaching 103. The total number of pairs is 52 x 52 = 2704. That gives the required probability, 103/2704.
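The counting argument can also be verified by brute force. The sketch below lists every possible pair of top cards and counts those containing at least one ace of spades; the card labels and names such as `deck` and `target` are assumptions made for illustration.

```python
from itertools import product

# Build one 52-card deck as (rank, suit) tuples.
RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["spades", "hearts", "diamonds", "clubs"]
deck = [(rank, suit) for rank in RANKS for suit in SUITS]

target = ("A", "spades")

# Every possible (top of deck 1, top of deck 2) pair, all equally likely.
pairs = list(product(deck, deck))

# Count the pairs in which at least one card is the ace of spades.
favorable = sum(1 for first, second in pairs if first == target or second == target)

print(favorable, "favorable pairs out of", len(pairs))
```

Because each pair is equally likely, dividing the favorable count by the total gives the same probability as the complement rule.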

The Monty Hall Problem: Jason Rosenhouse