Waiting-Time Paradox

Have you ever wondered why you always seem to wait longer at the bus stop than you expected? If that rings a bell but you suspect it is all in your head, hold on a bit. Chances are your feeling is valid, and probability theory can explain it.

Remember the inspection paradox?

See the previous post if you don’t know what I am talking about. The waiting-time paradox is a variation of the inspection paradox, and we will see how, so brush up on your probability and expected-value basics. As a one-line summary, the expected value is the average you would get over many repetitions, with each outcome weighted by its probability.

A bus every 10 minutes

You know that a bus to your destination arrives at the stop every 10 minutes, i.e., six buses every hour. Assuming you turn up at a random moment, you start with the premise that the average waiting time is five minutes: one day you arrive a minute before the next bus (a 1-minute wait), another day a minute after the last bus left (a 9-minute wait), and so on.
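To check the five-minute intuition, here is a minimal R sketch that assumes a perfectly regular schedule (buses at minutes 10, 20, 30, ...) and a passenger who turns up at a uniformly random time within the hour. The names `headway` and `n_passengers` are illustrative choices, not from the original post.

```r
set.seed(42)                      # reproducible random arrivals
headway <- 10                     # minutes between buses (assumed perfectly regular)
n_passengers <- 100000            # number of simulated visits to the stop

# Each passenger arrives at a uniformly random time within one hour
arrival <- runif(n_passengers, min = 0, max = 60)

# The next bus leaves at the next multiple of the headway
next_bus <- ceiling(arrival / headway) * headway
wait <- next_bus - arrival

mean(wait)                        # close to headway / 2 = 5 minutes
```

With clockwork buses, the simulated average sits right at 5 minutes, so the premise holds as long as the intervals never vary.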

Do not forget, though, that buses do not run like clockwork (unless your pick-up is at the starting station). So let’s get the arrival times of this bus at a given stop some distance after the starting point. I could make them up for illustration, but instead I will use the Poisson distribution to draw random time intervals between consecutive buses.

Here they are, generated with the R code rpois(6, 10): 10, 10, 11, 3, 10, 16. These are the minutes between consecutive buses, drawn at random; conveniently, they add up to exactly 60 minutes, i.e., one hour. The call rpois(n, lambda) draws n random values from a Poisson distribution with mean lambda, so here it generated 6 intervals with an expected value of 10 minutes.

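If you arrive at a random moment within a slot, your average wait in that slot is half the slot’s length. So the average waiting times inside these six slots are 10/2, 10/2, 11/2, 3/2, 10/2 and 16/2 minutes. You know what happens next.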
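Before weighting anything, it helps to see what these numbers give if every slot is treated as equally likely. This R sketch uses the six intervals quoted above; `intervals` and `slot_wait` are names chosen for illustration.

```r
# The six intervals (minutes) quoted above from rpois(6, 10)
intervals <- c(10, 10, 11, 3, 10, 16)

# Average wait if you land at a random moment inside each slot
slot_wait <- intervals / 2
slot_wait
# [1] 5.0 5.0 5.5 1.5 5.0 8.0

# Treating the six slots as equally likely gives the "naive" answer
mean(slot_wait)
# [1] 5
```

The unweighted mean is exactly 5 minutes. The catch is that a random arrival is not equally likely to land in each slot: a 16-minute gap is far easier to walk into than a 3-minute one.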

Probabilities and expectations

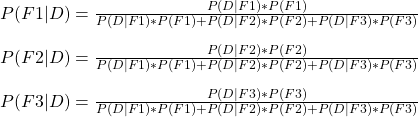

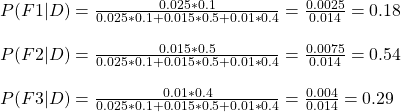

Now compute the probability that your random arrival during that hour falls into each slot. Each slot’s probability is its length divided by 60: 10/60, 10/60, 11/60, 3/60, 10/60 and 16/60. Each numerator is the length of one slot, i.e., the time between two consecutive buses, which is twice the average waiting time for that slot described in the previous paragraph.

The expected value (average waiting time) is the sum, over all slots, of the probability of landing in a slot times the average waiting time in that slot: (10/60) × (10/2) + (10/60) × (10/2) + (11/60) × (11/2) + (3/60) × (3/2) + (10/60) × (10/2) + (16/60) × (16/2) = 686/120 ≈ 5.72 minutes.
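The same calculation in R, as a minimal sketch using the six intervals quoted above (the names `intervals`, `prob_slot`, `slot_wait` and `expected_wait` are illustrative). The last line shows that the weighted sum collapses to the sum of the squared intervals divided by twice the total time.

```r
intervals <- c(10, 10, 11, 3, 10, 16)    # minutes between consecutive buses

prob_slot <- intervals / sum(intervals)  # chance a random arrival lands in each slot
slot_wait <- intervals / 2               # average wait inside each slot

expected_wait <- sum(prob_slot * slot_wait)
expected_wait
# [1] 5.716667

# Same number: sum of squared intervals over twice the total time
sum(intervals^2) / (2 * sum(intervals))
# [1] 5.716667
```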

Average is > 5 minutes
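Why above 5? The weighted sum in the previous section is the sum of the squared intervals divided by twice their total (686/120 for our six buses). Dividing numerator and denominator by the number of buses and letting that number grow gives the standard result for a passenger arriving at a random time, where T is the time between buses and W is the wait:

$$
E[W] = \frac{E[T^2]}{2\,E[T]} = \frac{E[T]}{2} + \frac{\operatorname{Var}(T)}{2\,E[T]}
$$

For intervals drawn with rpois(n, 10), E[T] = Var(T) = 10, so E[W] = 5 + 10/20 = 5.5 minutes; the 5.72 above is one random sample around that value. Any variability in the gaps (Var(T) > 0) pushes the average wait above half the mean gap, and only a perfectly regular schedule gives exactly 5 minutes.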

The following code summarises the whole exercise.

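```r
number_of_buses <- 100
avg_wait_buses <- 10                       # mean interval between buses (minutes)

# Random intervals between consecutive buses (Poisson, mean 10)
bus_arrive <- rpois(number_of_buses, avg_wait_buses)

# Chance that a random arrival falls in each slot: slot length / total time
prob_bus_slot <- bus_arrive / sum(bus_arrive)

# Average wait within a slot is half its length
avg_wait_slot <- bus_arrive / 2

Expected_wait <- sum(prob_bus_slot * avg_wait_slot)
Expected_wait                              # typically close to 5.5, i.e. above 5 minutes
```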
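As a cross-check that does not rely on the probability weighting at all, this R sketch simulates passengers turning up at random times against a long timetable whose gaps come from rpois(n, 10), and measures each wait directly. The seed, `n_passengers`, `formula_wait`, and the use of `findInterval()` are choices for illustration, not part of the original post.

```r
set.seed(1)
n_buses <- 10000
gaps <- rpois(n_buses, 10)                 # minutes between buses, as in the post
bus_times <- cumsum(gaps)                  # arrival time of each bus since the start

# Passengers arrive uniformly at random over the whole timetable
n_passengers <- 100000
arrival <- runif(n_passengers, min = 0, max = max(bus_times))

# findInterval(left.open = TRUE) returns i with bus_times[i] < t <= bus_times[i + 1],
# so bus i + 1 is the first bus at or after each arrival
next_idx <- findInterval(arrival, bus_times, left.open = TRUE) + 1
wait <- bus_times[next_idx] - arrival

mean(wait)                                 # simulated average wait
formula_wait <- mean(gaps^2) / (2 * mean(gaps))
formula_wait                               # sum of squared gaps over twice the total
```

Both numbers should come out close to 5.5 minutes, which is E[T^2] / (2 E[T]) = 110/20 for Poisson gaps with mean 10, confirming that random arrivals wait longer than half the average gap.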