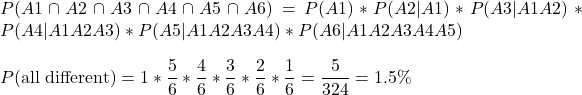

Path of a Random Walker

Let’s demystify probability games and the law of large numbers, using coin tossing as an example.

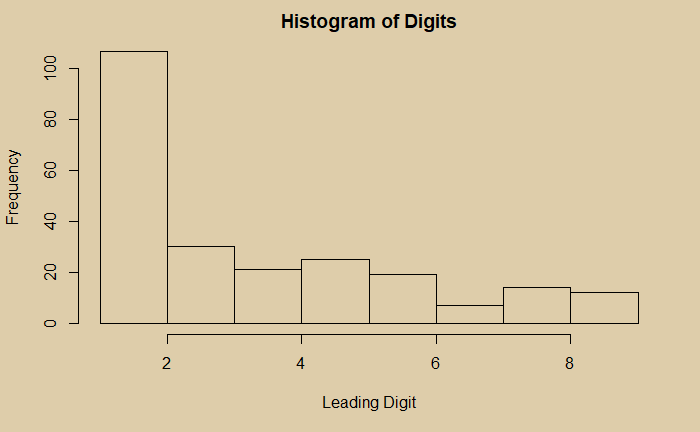

Law of large numbers

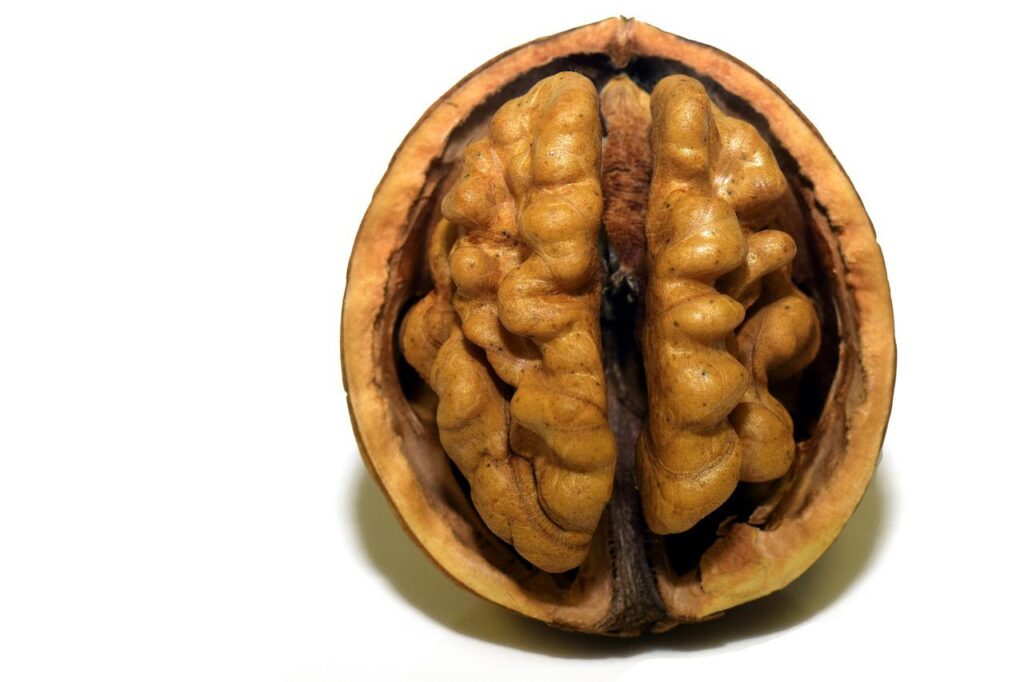

We know what it means: when one averages the outcomes of many repetitions of an experiment (say, several thousand), the result converges to the expected value, or theoretical average. For example, if one tosses a fair coin a million times, the fraction of heads comes very close to 0.5, or 50%. Recall the plot we developed earlier.
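This convergence can be reproduced with a minimal Python sketch, assuming a fair coin simulated with the standard `random` module. The function name `fraction_of_heads` and the seed are illustrative choices, not from the original post. The exact numbers depend on the seed, but the fraction should settle closer to 0.5 as the number of tosses grows.

```python
import random

def fraction_of_heads(n_tosses, seed=None):
    """Toss a simulated fair coin n_tosses times and return the fraction of heads."""
    rng = random.Random(seed)  # private, seeded generator so runs are reproducible
    # rng.random() < 0.5 is True (counted as 1) for a head, False (0) for a tail
    heads = sum(rng.random() < 0.5 for _ in range(n_tosses))
    return heads / n_tosses

for n in (10, 1_000, 100_000, 1_000_000):
    print(n, fraction_of_heads(n, seed=1))
```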

But that is deceptive. It gives the impression that if you play a million games, winning $1 for a head and losing $1 for a tail, your wallet remains unchanged, whereas in a short game you gain or lose more money. That is not true.

Higher absolute, lower average

In the coin-tossing game, how do you calculate the average number of heads? It is the number of heads divided by the number of games played. If I play only one game and get a head, the average is 1; if I get a tail, it is 0. These are the two most extreme averages you can ever get (1/1 and 0/1). In the betting game described in the previous section, you would gain a dollar or lose one. On the other hand, if you play 100 games and win 60, you gain 20 dollars (60 - 40), and the mean is 60/100 = 0.6. If you play a million games and get 184 more heads than tails, the mean is even closer to the theoretical value (500,092/1,000,000 = 0.500092). Yet you gain more money ($184), and you could just as easily have lost more.
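The arithmetic above can be checked with a small Python sketch. The helper `summarize` is hypothetical; it assumes the same rules as the text ($1 won per head, $1 lost per tail) and returns the net winnings alongside the fraction of heads.

```python
def summarize(heads, games):
    """Return (net winnings in dollars, fraction of heads) for +$1 per head, -$1 per tail."""
    tails = games - heads
    net = heads - tails          # each head adds $1, each tail subtracts $1
    mean_head = heads / games    # fraction of games that came up heads
    return net, mean_head

print(summarize(1, 1))                 # (1, 1.0)
print(summarize(60, 100))              # (20, 0.6)
print(summarize(500_092, 1_000_000))   # (184, 0.500092)
```

The net winnings grow from $1 to $184 while the mean moves toward 0.5, which is exactly the "higher absolute, lower average" effect.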

Random walk

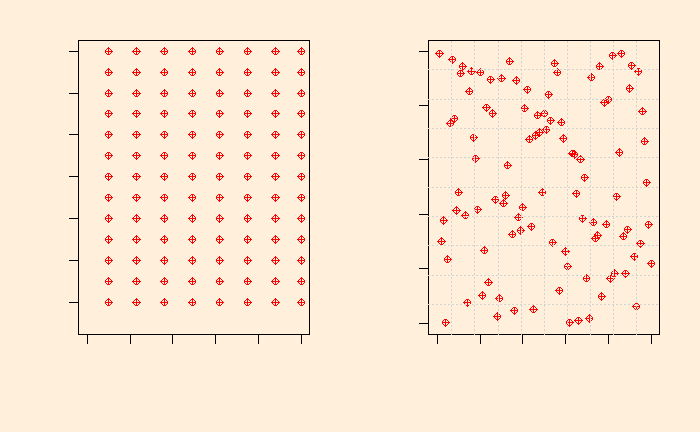

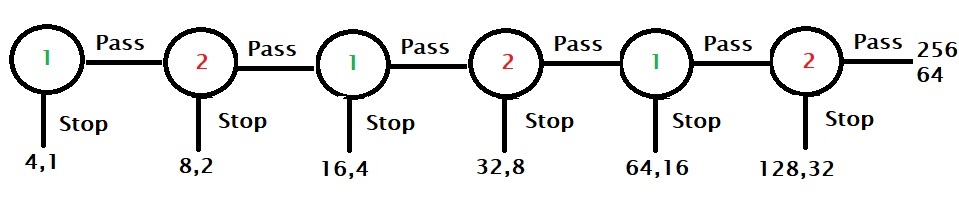

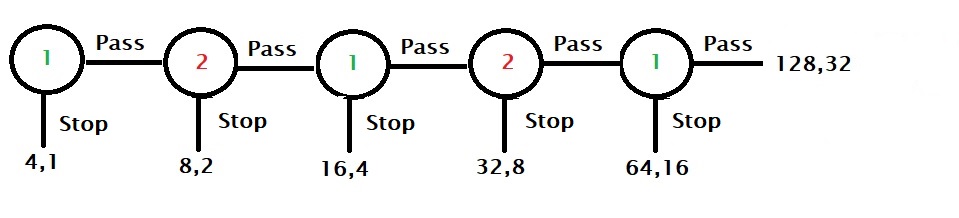

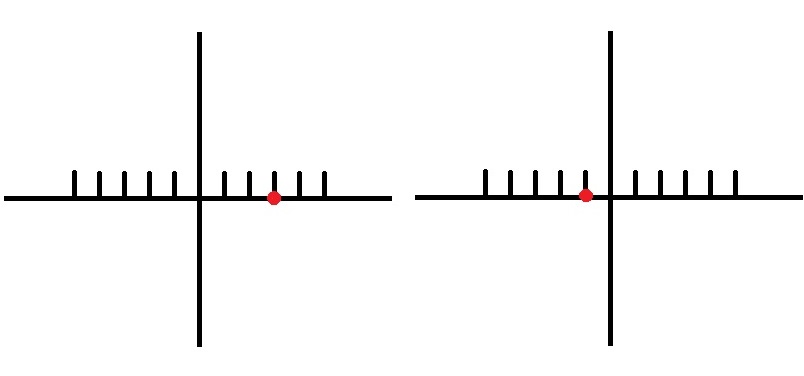

The figure we saw in the earlier section showed the average, not the absolute gain. To display the wins and losses of the games played, we can use a random walk model. Imagine you are standing at the intersection of the X and Y axes, i.e., at the origin. You play, and if you win, you move one unit to the right; if you lose, you move one unit to the left.

Here are two such walks. On the left, the walker had the following outcomes: H, H, T, H, H. This is equivalent to 1, 1, -1, 1, 1 in terms of money and 1, 2, 1, 2, 3 in terms of position from the origin. The corresponding sequences for the right walker are (outcomes H, H, T, T, T), (money 1, 1, -1, -1, -1) and (positions 1, 2, 1, 0, -1). The last entry of the position sequence is the walker's position after five games.
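The position sequence is just the running sum of the money sequence. A minimal Python sketch, with a hypothetical helper `walk` that takes the outcomes as a string of `H` and `T` characters, reproduces both walks:

```python
from itertools import accumulate

def walk(outcomes):
    """Map a string of H/T outcomes to per-game money and cumulative position."""
    money = [1 if o == "H" else -1 for o in outcomes]  # +1 for a head, -1 for a tail
    position = list(accumulate(money))                 # running sum = position after each game
    return money, position

print(walk("HHTHH"))  # ([1, 1, -1, 1, 1], [1, 2, 1, 2, 3])
print(walk("HHTTT"))  # ([1, 1, -1, -1, -1], [1, 2, 1, 0, -1])
```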

To sum up

The law of large numbers doesn’t mean that if you play a large number of coin-tossing games, you win or lose nothing. It only means that your average gain per game shrinks toward zero as the number of games grows. On average, the walker's distance from the origin keeps growing, although the rate of growth slows down. We will see more on random walks in the next post.
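Both effects can be seen in a rough simulation sketch. The helper `walk_stats`, the walker count and the seed are illustrative assumptions. It estimates the root-mean-square distance from the origin, which for this walk grows like the square root of the number of games, and the average absolute gain per game, which shrinks as the games go on.

```python
import math
import random

def walk_stats(n_games, n_walkers=1000, seed=0):
    """Simulate n_walkers independent walks of n_games steps each.

    Returns (rms_position, mean_abs_gain_per_game) measured after n_games.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    finals = []
    for _ in range(n_walkers):
        # final position = net winnings after n_games of +1/-1 steps
        pos = sum(1 if rng.random() < 0.5 else -1 for _ in range(n_games))
        finals.append(pos)
    rms = math.sqrt(sum(p * p for p in finals) / n_walkers)
    per_game = sum(abs(p) for p in finals) / n_walkers / n_games
    return rms, per_game

for n in (10, 100, 1000):
    rms, per_game = walk_stats(n)
    print(f"n={n:5d}  rms distance={rms:6.1f}  sqrt(n)={math.sqrt(n):6.1f}  |gain| per game={per_game:.3f}")
```

Exact values depend on the seed, but the RMS distance should track the `sqrt(n)` column while the per-game gain falls.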
