Scoring Own Goals

Yesterday was a disastrous night for Leicester City’s Wout Faes, who scored not one but two own goals for the opponents, Liverpool, and did so while his team was leading!

What is the probability?

A quick search online suggests that, based on the last five seasons of the English Premier League, there is about a 9% chance of an own goal being scored in a match. Assuming own goals occur randomly, following a Poisson distribution with an expected value (lambda) of 0.09 per match, we can write the following code to get a feel for how they are distributed over a season. Note that there are 380 matches in a season.

plot(rpois(380,0.09), ylim = c(0,2), xlim = c(0,400), xlab = "Match Number", ylab = "Number of Own Goals", pch = 1)

The plot is just one realisation; because the process is random, each match in a season can produce 0, 1, 2 or more than 2 own goals, and every run of the code will look different.
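As a rough cross-check on what a single realisation shows, the per-match probabilities can also be read directly from the Poisson mass function. The sketch below assumes the same rate of 0.09 own goals per match; the variable names are illustrative and not part of the original code.

```r
# Assumed rate: 0.09 own goals per match, as in the text above
lambda <- 0.09

# Probability of exactly 0, 1 or 2 own goals in a single match
p_exact <- dpois(0:2, lambda)

# Probability of more than 2 own goals (upper tail of the distribution)
p_more <- ppois(2, lambda, lower.tail = FALSE)

probs <- c(p_exact, p_more)
names(probs) <- c("0", "1", "2", ">2")
print(round(probs, 5))

# Expected number of matches in each category over a 380-match season
print(round(380 * probs, 2))
```

Multiplying by 380 turns each probability into an expected count of matches per season, which is the quantity the simulations estimate.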

1000th own goal last year

The following calculation estimates the average number of own goals over the history of the Premier League (the 30 completed seasons).

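itr <- 10000  # number of simulated histories

own_goal <- replicate(itr, {
  # one Poisson draw per match: 27 seasons of 380 matches plus 3 of 420
  epl <- rpois(380*27 + 420*3, 0.09)
  # count the matches with at least one own goal
  sum(epl > 0)
})

mean(own_goal)       # average over the whole history
mean(own_goal)/30    # average per season

The output below is not far off from what was observed (ca. 33 own goals per season, with the 1000th own goal scored last year).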

991.0816

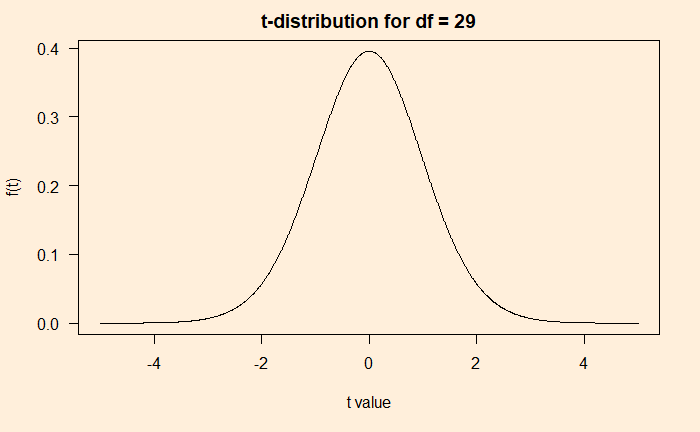

33.03605

How rare are two?

itr <- 10000

own_goal <- replicate(itr, {
  epl <- rpois(380, 0.09)   # one season of 380 matches
  sum(epl == 2)             # matches with exactly two own goals
})

mean(own_goal)

The expected number of matches with two own goals is ca. 1.4 per season; for reference, the 2019-20 season had one occurrence.
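The simulated figure can be checked against a closed form, since the expected number of matches with exactly two own goals is the number of matches times the Poisson probability of a count of two. This is a minimal sketch under the same assumptions (rate 0.09 per match, 380 independent matches); the variable names are illustrative.

```r
lambda <- 0.09     # assumed own-goal rate per match, as above
n_matches <- 380   # matches in one season

# Expected number of matches per season with exactly two own goals
expected_two <- n_matches * dpois(2, lambda)
print(expected_two)

# Probability that a season contains at least one such match,
# treating the matches as independent
p_at_least_one <- 1 - (1 - dpois(2, lambda))^n_matches
print(p_at_least_one)
```

The first value should land close to the simulated mean, and the second gives a sense of how often a season would pass without any two-own-goal match.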

Next, what is the expected value for two own goals scored by one team?

epl <- rpois(380*2, 0.09/2)  # one draw per team per match, each at half the match rate

Extending it further: if own goals are spread evenly over the 20 outfield players in a match (excluding the goalkeepers), the own-goal rate per player per match becomes 0.09/20. The following code gives the expected number of instances of a player scoring two own goals in a match up to the end of last season, i.e., over 420 x 3 + 380 x 27 matches.

itr <- 10000

own_goal <- replicate(itr, {
  # one draw per outfield player per match, at 1/20 of the match rate
  epl <- rpois((380*27 + 420*3)*20, 0.09/20)
  sum(epl == 2)   # players with exactly two own goals in a match
})

mean(own_goal)

The answer is 2.3. The actual number stood at 3 at the end of last season: Jamie Carragher (1999, Liverpool vs Manchester United), Michael Proctor (2003, Sunderland vs Charlton) and Jonathan Walters (2013, Stoke vs Chelsea).
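The same closed-form check applies at player level, and it also gives a rough idea of how surprising three observed instances would be. The sketch assumes the match count used in the simulation and an even split of the 0.09 rate over 20 outfield players; the variable names are illustrative.

```r
lambda_player <- 0.09 / 20           # assumed rate per outfield player per match
n_matches <- 380 * 27 + 420 * 3      # match count used in the simulation above
n_player_matches <- n_matches * 20   # one Poisson draw per outfield player per match

# Probability that a given player scores exactly two own goals in a match
p_two <- dpois(2, lambda_player)

# Expected number of such instances over the whole period
expected_instances <- n_player_matches * p_two
print(expected_instances)

# Probability of three or more instances, with the count treated as binomial
p_three_or_more <- pbinom(2, size = n_player_matches, prob = p_two,
                          lower.tail = FALSE)
print(p_three_or_more)
```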

References

How Common Are Own Goals:

1000 own goals: Guardian

Liverpool-Leicester City: BBC

Most Premier League own goals: The Sporting News

Premier League Stats