Bayes Factor and p-Value Threshold

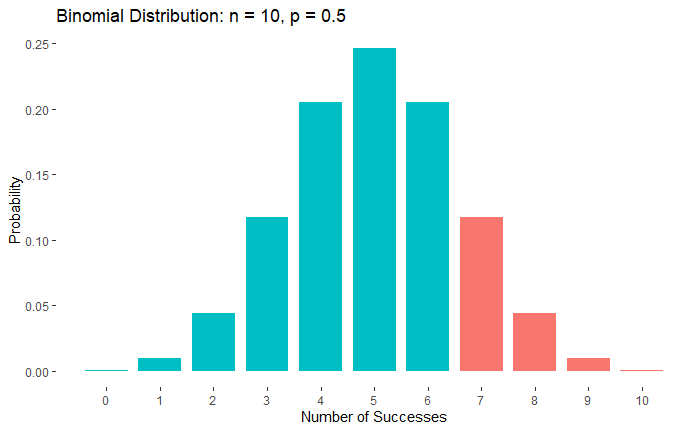

Blind faith in the 5% significance threshold (p = 0.05) has contributed to a credibility problem in the scientific community. It has hurt claims of new ‘discoveries’ more than anything else. Although the choice of p = 0.05 as the threshold was arbitrary, it has become the benchmark for studies in several fields.

It is easier to appreciate the issue once you understand the Bayes factor concept. We have established the relationship between the prior and posterior odds (of discovery) in an earlier post:

Posterior Odds = BF10 x Prior Odds

Studies suggest that the prior odds for a typical psychology study are about 1:10 (H1 relative to H0). For clarity, H1 is the hypothesis that leads to a finding, H0 is the null hypothesis, and BF10 is the Bayes factor for H1 relative to H0. In this context, a p-value of 0.05, which is equivalent to a Bayes factor of ca. 3.4, leads to the following transformation.

Posterior Odds = 3.4 x (1/10) = 0.34 ≈ 1/3; the odds still favour the null hypothesis by about 3 to 1.

On the other hand, if the threshold is p = 0.005 (equivalent to a Bayes factor of ca. 26),

Posterior Odds = 26 x (1/10) = 2.6, or 2.6 to 1; the odds now favour the discovery.
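
The two calculations above can be reproduced with a few lines of Python. This is only a minimal sketch: the function names are made up for illustration, the Bayes factors (3.4 and 26) and the prior odds (1:10) are the values quoted in this post, and the conversion from odds to probability, odds / (1 + odds), is the standard identity rather than anything specific to the reference.

```python
def posterior_odds(bayes_factor: float, prior_odds: float) -> float:
    """Posterior odds of H1 over H0: BF10 x prior odds."""
    return bayes_factor * prior_odds


def odds_to_probability(odds: float) -> float:
    """Convert odds in favour of H1 into the probability of H1."""
    return odds / (1.0 + odds)


# Assumed prior odds of 1:10 (H1 relative to H0), as quoted in the post.
PRIOR_ODDS = 1 / 10

# p-value threshold -> approximate Bayes factor, as quoted in the post.
THRESHOLDS = {0.05: 3.4, 0.005: 26.0}

for p_threshold, bf10 in THRESHOLDS.items():
    odds = posterior_odds(bf10, PRIOR_ODDS)
    prob = odds_to_probability(odds)
    print(f"p = {p_threshold}: posterior odds = {odds:.2f}, P(H1 | data) = {prob:.2f}")
```

Under these assumptions, a result that just reaches p = 0.05 leaves H1 with a probability of roughly 0.25, while one that just reaches p = 0.005 raises it to roughly 0.72.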

Reference

Redefine statistical significance, Nature Human Behaviour
