The Netflix documentary ‘What the Health’ is better described as propaganda than as reasoned argument. Let’s look at some of the logical fallacies the program commits.

The documentary intends to promote veganism, which, I think, is a fair goal. Food accounts for about 25% of greenhouse gas emissions, and meat is responsible for about half of that share. However, the tactics used by the film’s producers range from cherry-picking to outright misinformation.

Meat and cancer

The program begins with the infamous connection between processed meat and (colorectal) cancer, which comes from the 2015 findings of the International Agency for Research on Cancer (IARC). One main suspect is the production of polycyclic aromatic hydrocarbons (PAHs) during cooking by pan-frying, grilling, or barbecuing. The findings led the IARC to classify processed meat in Group 1 (carcinogenic to humans) and red meat in Group 2A (probably carcinogenic to humans).

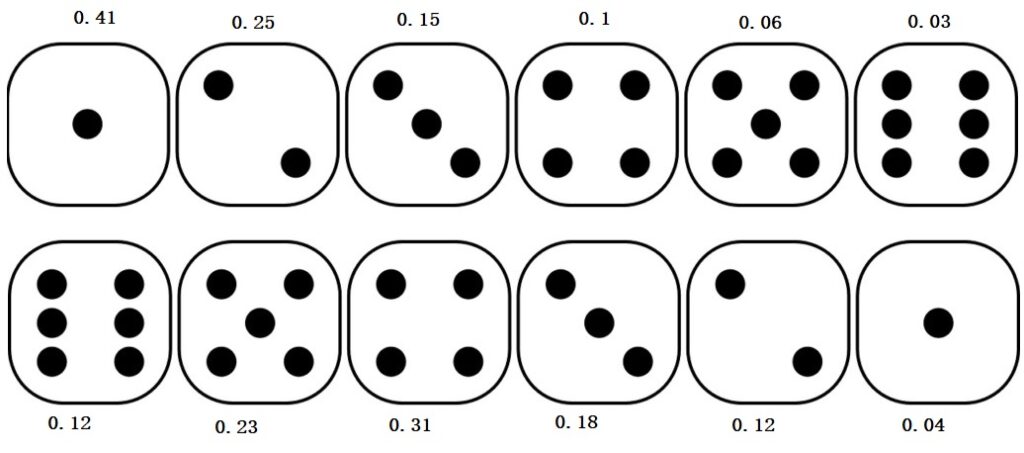

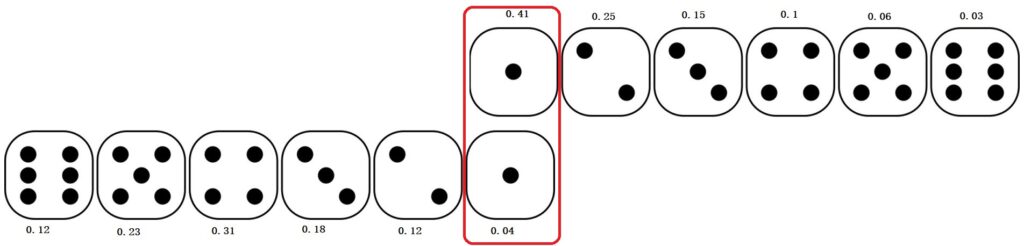

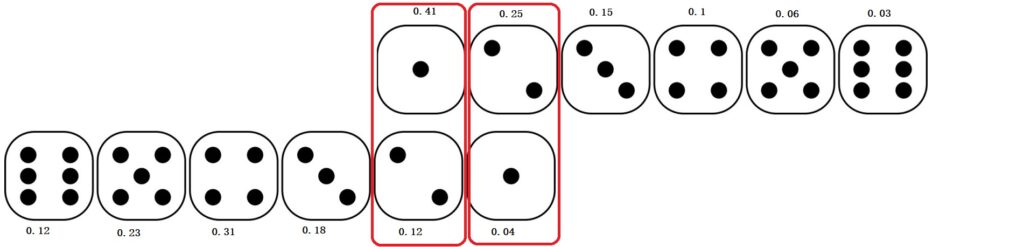

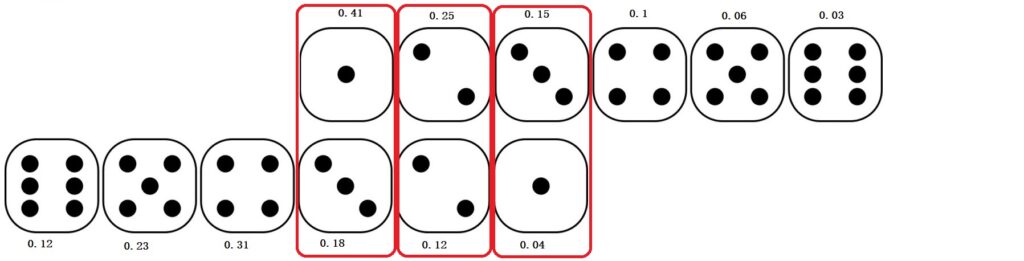

Statistics of the low base

We already know the background of the study and what an 18% increase means. In simple language, the average lifetime risk of colorectal cancer (about 5 in 100) becomes about 6 in 100 for regular eaters of processed meat. As a comparison, smoking makes the lifetime risk of lung cancer 17.2 in 100 vs. 1.3 in 100 for non-smokers, an increase of roughly 1,200%, or about thirteen times the baseline.
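The gap between relative and absolute risk is easy to check with a few lines of arithmetic. The sketch below uses only the figures quoted above (5 in 100, 18%, 1.3 in 100 and 17.2 in 100); the function names are hypothetical and chosen purely for illustration.

```python
def risk_after_increase(baseline_per_100, relative_increase_pct):
    """Risk per 100 people after applying a relative (percentage) increase."""
    return baseline_per_100 * (1 + relative_increase_pct / 100)


def relative_increase_pct(baseline_per_100, exposed_per_100):
    """Relative increase, in percent, from the baseline risk to the exposed risk."""
    return (exposed_per_100 - baseline_per_100) / baseline_per_100 * 100


# Figures quoted in the text above (all per 100 people).
colorectal_baseline = 5.0
colorectal_exposed = risk_after_increase(colorectal_baseline, 18)

lung_non_smoker = 1.3
lung_smoker = 17.2
smoking_increase = relative_increase_pct(lung_non_smoker, lung_smoker)

print(f"Colorectal cancer: {colorectal_baseline:.1f} -> {colorectal_exposed:.1f} per 100")
print(f"Extra cases per 100 from processed meat: {colorectal_exposed - colorectal_baseline:.1f}")
print(f"Lung cancer: {lung_non_smoker:.1f} -> {lung_smoker:.1f} per 100")
print(f"Extra cases per 100 from smoking: {lung_smoker - lung_non_smoker:.1f}")
print(f"Relative increase from smoking: {smoking_increase:.0f}%")
```

On these numbers, processed meat adds a little under one case per 100 people, while smoking adds nearly sixteen, even though both exposures sit in the same IARC group.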

Appeal to fear

The program also picks out some of processed meat’s 126 fellow members of Group 1, such as plutonium, asbestos and cigarettes, to emphasise the seriousness of the classification. It conveniently forgets that alcoholic beverages, areca nuts and solar radiation are on the same list. To reiterate, the items in a group do not carry the same risk. A place in Group 1 means only that the association with cancer is established for that item; it says nothing about the absolute risk.

Sugar-coated binary

The film then argues, with the help of a few ‘experts’, that sugar, considered by many to be a problem molecule, plays no role in diseases such as diabetes. Creating an innocent ‘other’ in order to demonise the intended target was totally unnecessary.

Missing the balance

The documentary slips into propaganda because it lacks balance. No one disputes the need for more plant-based food and exercise in one’s lifestyle. It is also important to get the right amount of micronutrients and protein, and the sources of these may include meat, eggs and dairy products.

The documentary is propaganda as it primarily appeals to emotion. The objective is to form opinions rather than increase knowledge. It uses strategies such as cherry-picking, appealing to fear and misinformation.

References

IARC Report on Processed Meat

Known Carcinogens: Cancer.org

Carcinogenicity of Processed Meat: The Lancet Oncology

How common is colorectal cancer: cancer.org

Carbon Footprint Factsheet: umich

Climate change food calculator: BBC

IARC Classifications: WHO

IARC Group 1 Carcinogens: Wiki

Lung cancer by smoking: PubMed
