Three Prisoners

A, B, and C are jailed for a serious crime and held in separate cells. They learn that, based on a lottery, one of them will be hanged the next day and the other two will go free. A learns that the lot has already been drawn and asks the warden whether he is the unlucky one. The warden won’t answer that, but he can name one prisoner other than A who will go free. Does A benefit from this information?

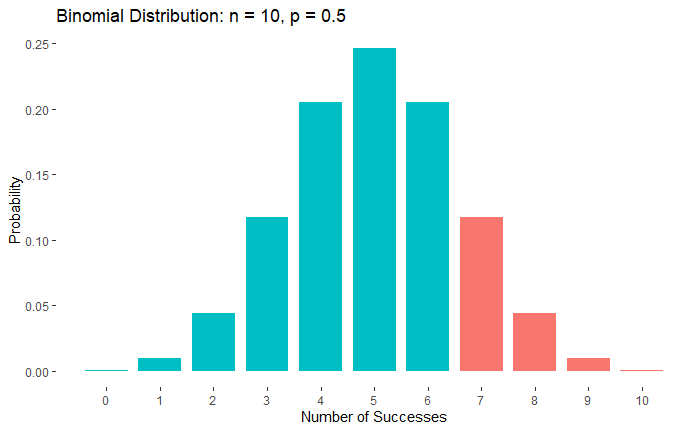

The sample space of release pairs is AB, AC, and BC, each with probability 1/3. A appears in two of the three pairs, so the probability that A is released is 2/3.

When A asks the question, the following scenarios can occur from the warden’s perspective, each with its own probability.

1. A and B are released, and the warden says B. The probability is 1/3 × 1 = 1/3. The first factor is the probability of the pair AB, and the second is the probability of naming B, which is 1 because the warden can’t say A’s name.

2. A and C are released, and the warden says C. The probability is 1/3 × 1 = 1/3.

3. B and C are released, and the warden says B. The probability is 1/3 × 1/2 = 1/6, since the warden can name either B or C and picks each with equal probability.

4. B and C are released, and the warden says C. The probability is 1/3 × 1/2 = 1/6.
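The four scenarios above can be tabulated with exact fractions to check that they cover the whole sample space. This is a minimal sketch: the `scenarios` dictionary and its keys are illustrative names, and the 1/2 factor assumes the warden picks B or C with equal probability when both go free.

```python
from fractions import Fraction

third = Fraction(1, 3)  # probability of each release pair
half = Fraction(1, 2)   # assumed: warden picks B or C at random when both are free

# (released pair, name the warden says) -> joint probability
scenarios = {
    ("AB", "B"): third * 1,     # scenario 1: the warden can only say B
    ("AC", "C"): third * 1,     # scenario 2: the warden can only say C
    ("BC", "B"): third * half,  # scenario 3
    ("BC", "C"): third * half,  # scenario 4
}

for (pair, named), p in scenarios.items():
    print(f"released {pair}, warden says {named}: {p}")

# The joint probabilities of all scenarios should add up to 1.
total = sum(scenarios.values())
print("total:", total)
```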

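Here, there are two scenarios in which the warden says B (1 and 3), and A is released in only one of them (scenario 1). Therefore, given that the warden says B, the probability that A goes free is P(scenario 1) / (P(scenario 1) + P(scenario 3)) = (1/3) / (1/3 + 1/6) = 2/3. By symmetry, the same holds if the warden says C. This equals the probability before A asked, so A gets no benefit from asking for the name.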
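The conditional probability can also be checked empirically. The sketch below is a simple Monte Carlo simulation, not part of the original argument: the function name `simulate`, the trial count, and the seed are arbitrary choices, and it again assumes the warden names B or C with equal probability when A is the one to be hanged.

```python
import random

def simulate(trials=100_000, seed=0):
    """Estimate P(A is released | warden says B) by repeated random draws."""
    rng = random.Random(seed)
    says_b = 0             # trials in which the warden names B
    a_free_and_says_b = 0  # of those, trials in which A is released
    for _ in range(trials):
        hanged = rng.choice("ABC")  # the lottery picks who is hanged
        if hanged == "A":
            named = rng.choice("BC")  # B and C both go free: pick one at random
        elif hanged == "B":
            named = "C"  # A and C go free; the warden can't say A
        else:
            named = "B"  # A and B go free; the warden can't say A
        if named == "B":
            says_b += 1
            if hanged != "A":
                a_free_and_says_b += 1
    return a_free_and_says_b / says_b

estimate = simulate()
print(f"P(A free | warden says B) ~ {estimate:.3f}")
```

With enough trials the estimate should settle close to 2/3, in line with the calculation above.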