Risk Ratio and Odds Ratio

What is the risk of you getting lost in the barrage of jargon used by statisticians? What are the odds of the earlier statement being true? Risks, odds and their corresponding ratios are terms used by statisticians to mesmerise non-statisticians.

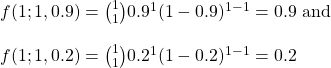

Risk is probability, p

In medical studies, the word risk means probability. For example, if one person in a population of 1000 has cancer, the risk of cancer in that society is 1/1000, or 0.001. For coin flipping, our favourite hobby, the risk of getting a head is 1/2 = 0.5, and for rolling a die, the risk of getting a 3 is 1/6, or 0.167. You may call it the absolute risk because you will soon see something that is not absolute (called relative), so be prepared.

Odds are p/(1-p)

Odds are the probability of an event occurring in a group divided by the probability of the event not occurring. Odds are a favourite of bettors. The odds of cancer in the earlier fictitious society are (1/1000)/(999/1000) = 1/999, or about 0.001. The number looks the same as the risk, but that is no coincidence: when the probability p is small, 1 - p is very close to 1, so p/(1-p) is very close to p. For coin tossing, the odds of heads are (0.5/0.5) = 1, and for the die, the odds of a 3 are (0.167/0.833) = 0.2. Conversely, the odds of getting anything but a 3 are (5/6)/(1/6) = 0.833/0.167 = 5.
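The conversion from risk to odds is a one-liner, and a minimal sketch may make the examples above easier to check. The function name `odds` and the variable names are illustrative choices, not anything from a particular library.

```python
def odds(p: float) -> float:
    """Convert a probability p (0 <= p < 1) into odds, p / (1 - p)."""
    if not 0 <= p < 1:
        raise ValueError("p must satisfy 0 <= p < 1")
    return p / (1 - p)


# The examples from the text
cancer = odds(1 / 1000)      # stays close to the risk because 1 - p is almost 1
coin = odds(1 / 2)           # heads in a coin toss
die_three = odds(1 / 6)      # rolling a 3
die_not_three = odds(5 / 6)  # rolling anything but a 3

for name, value in [
    ("cancer", cancer),
    ("coin", coin),
    ("die: 3", die_three),
    ("die: not 3", die_not_three),
]:
    print(f"{name}: {value:.4f}")
```

The guard against p = 1 is there because the odds are undefined (division by zero) when the event is certain.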

Titanic survivors

| Sex | Died | Survived | Risk of death |
| --- | --- | --- | --- |
| Men | 1364 | 367 | 1364/(1364 + 367) = 0.79 |
| Women | 126 | 344 | 126/(126 + 344) = 0.27 |

| Sex | Risk of death | Risk of survival | Odds of death |
| --- | --- | --- | --- |
| Men | 0.79 | 1 - 0.79 | 0.79/(1 - 0.79) = 3.76 |
| Women | 0.27 | 1 - 0.27 | 0.27/(1 - 0.27) = 0.37 |

Risk ranges between 0 and 1; odds, on the other hand, range from 0 to infinity. When the risk rises above 0.5, the odds cross 1.
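The Titanic risks and odds can also be recomputed directly from the raw counts. The sketch below does that; the names `counts` and `risk_and_odds` are illustrative. Because it works from unrounded counts, the odds of death for men come out near 3.72 rather than the 3.76 obtained from the rounded risk of 0.79.

```python
# Counts from the Titanic table: (died, survived)
counts = {"Men": (1364, 367), "Women": (126, 344)}


def risk_and_odds(died: int, survived: int) -> tuple[float, float]:
    """Return (risk of death, odds of death) for one group."""
    risk = died / (died + survived)
    odds = died / survived  # algebraically the same as risk / (1 - risk)
    return risk, odds


results = {sex: risk_and_odds(*c) for sex, c in counts.items()}

for sex, (risk, odds) in results.items():
    print(f"{sex}: risk of death = {risk:.2f}, odds of death = {odds:.2f}")
```

For men, the risk is above 0.5 and the odds are above 1; for women, the risk is below 0.5 and the odds are below 1.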

Now, the ratios, RR and OR

Risk Ratio (RR) is another name for Relative Risk: the risk in one group divided by the risk in another. If the risk of cancer in one group is 0.002 and in another is 0.001, then RR = (0.002/0.001) = 2. The RR of losing at dice rolling to losing at coin tossing is (5/6)/(1/2) = 1.7. In the Titanic example, the RR of death (men relative to women) is (0.79/0.27) = 2.93.

The Odds Ratio (OR) is the ratio of the odds in two groups. For the Titanic, the OR of death (men relative to women) is (3.76/0.37) = 10.16.
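As a cross-check, here is a short sketch that computes both ratios from the Titanic counts. The helper names `risk` and `odds` are illustrative. Since it uses unrounded values, it gives roughly 2.94 for RR and 10.15 for OR, slightly different from the figures in the text, which are built from risks and odds rounded to two decimals.

```python
# Titanic counts from the table: deaths and group sizes
men_died, men_total = 1364, 1364 + 367
women_died, women_total = 126, 126 + 344


def risk(events: int, total: int) -> float:
    """Probability of the event in a group."""
    return events / total


def odds(events: int, total: int) -> float:
    """Events divided by non-events in a group."""
    return events / (total - events)


# Men relative to women
rr = risk(men_died, men_total) / risk(women_died, women_total)
odds_ratio = odds(men_died, men_total) / odds(women_died, women_total)

print(f"RR = {rr:.2f}")
print(f"OR = {odds_ratio:.2f}")
```

The OR is much larger than the RR here because death was a common outcome; the two ratios come close to each other only when the risks in both groups are small.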
