Bouquets and Brickbats

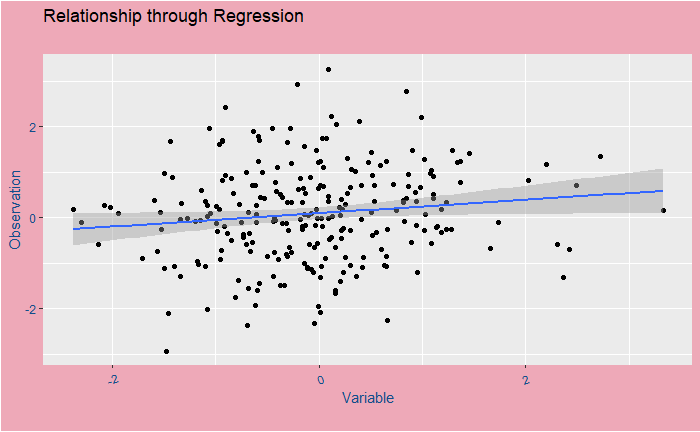

Regression to the mean misleads a lot of us. We have seen the concept of regression before. In simple language: most of our superlative achievements and miserable falls are statistical, although you may prefer to credit them to your superior skill set or blame them on your utter stupidity. By statistical, I do not mean sheer luck but something milder; more like ‘perhaps unexpected, but not improbable’.

In flight training, experienced trainers believe that praise after an exceptionally smooth landing is followed by a poorer landing, while harsh criticism after a rough landing leads to improvement. They believe this because 1) it fits some old-generation stereotypes, 2) that is what they see (but how often?), or 3) memories of such instances persist longer.
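The trainers' observation can be reproduced in a toy model where feedback has no effect at all. The sketch below assumes, purely for illustration, that each landing score is a pilot's fixed skill plus fresh random luck, both drawn from a standard normal distribution; the function name `simulate_landings` and its parameters are hypothetical and not from the source. Pilots whose first landing was in the top 10% (the ones who would be praised) tend to do worse next time, and those in the bottom 10% (the ones who would be criticized) tend to do better, even though praise and criticism appear nowhere in the code.

```python
import random
import statistics


def simulate_landings(n_pilots=10_000, skill_sd=1.0, luck_sd=1.0, seed=42):
    """Hypothetical toy model: landing score = skill + luck.

    Feedback is never modelled, so any change between the two landings
    comes purely from the luck term being redrawn.
    Returns mean (first, second) scores for the top and bottom 10%
    of pilots, ranked by their first landing.
    """
    rng = random.Random(seed)
    pilots = []
    for _ in range(n_pilots):
        skill = rng.gauss(0.0, skill_sd)          # stable ability, shared by both landings
        first = skill + rng.gauss(0.0, luck_sd)   # luck drawn fresh for each landing
        second = skill + rng.gauss(0.0, luck_sd)
        pilots.append((first, second))

    firsts = sorted(f for f, _ in pilots)
    low_cut = firsts[n_pilots // 10]              # roughly the 10th percentile
    high_cut = firsts[(9 * n_pilots) // 10]       # roughly the 90th percentile

    def means(group):
        return (statistics.mean(f for f, _ in group),
                statistics.mean(s for _, s in group))

    praised = [p for p in pilots if p[0] >= high_cut]    # would have been praised
    criticized = [p for p in pilots if p[0] <= low_cut]  # would have been criticized
    return {"praised": means(praised), "criticized": means(criticized)}


results = simulate_landings()
for group, (first, second) in results.items():
    print(f"{group:>10}: first landing {first:+.2f}, next landing {second:+.2f}")
```

Both groups drift roughly halfway back toward the average because, with equal skill and luck variances in this model, only half of a first landing's deviation is skill that carries over to the next one.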

Regression to the mean says that an above-average data point is more likely to be followed by a lower, less extreme one, closer to the average. Well, is that not why something called an average exists? Look at the problem differently. What is the probability that a given performance is outstanding? It has to be less than one and likely close to zero; otherwise, the word ‘outstanding’ would need a new dictionary definition. Having seen one such performance, what is the chance of getting another equally good (or even rarer) one right after it? If the two performances are independent, the chance of the pair is the product of two small fractions, which is smaller still.
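The product argument can be checked numerically. This is a minimal sketch assuming independent performances and a hypothetical 5% chance (`p_outstanding`) that any single performance is outstanding; the function name is illustrative. The Monte Carlo estimate of two outstanding performances in a row should land close to the exact value of 0.05 × 0.05 = 0.0025.

```python
import random


def run_of_two_probability(p_outstanding=0.05, trials=200_000, seed=7):
    """Estimate P(two outstanding performances in a row), assuming independence."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        first = rng.random() < p_outstanding   # is the first performance outstanding?
        second = rng.random() < p_outstanding  # independent draw for the next one
        if first and second:
            hits += 1
    return hits / trials


p = 0.05
simulated = run_of_two_probability(p)
print(f"exact p*p = {p * p:.4f}, simulated = {simulated:.4f}")
```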

The trainers' belief comes from a failure to understand how probability and regression work. So next time, if LeBron’s son doesn’t become a first-round pick, don’t blame the chap. What happened to him was normal, whereas what happened to his father was rare!

Tversky, A.; Kahneman, D. Judgment under Uncertainty: Heuristics and Biases. Science, 1974, 185 (4157), 1124–1131.
