The 17 Coins Game

Here is another game played between two players. Seventeen coins are placed in a circle. On her turn, a player takes either one coin or two coins. If she takes two, they must be next to each other, i.e., there must be no gap between them. Whoever takes the last coin wins. As before, the game gives an advantage to one player. Who is it, and what is her winning strategy?

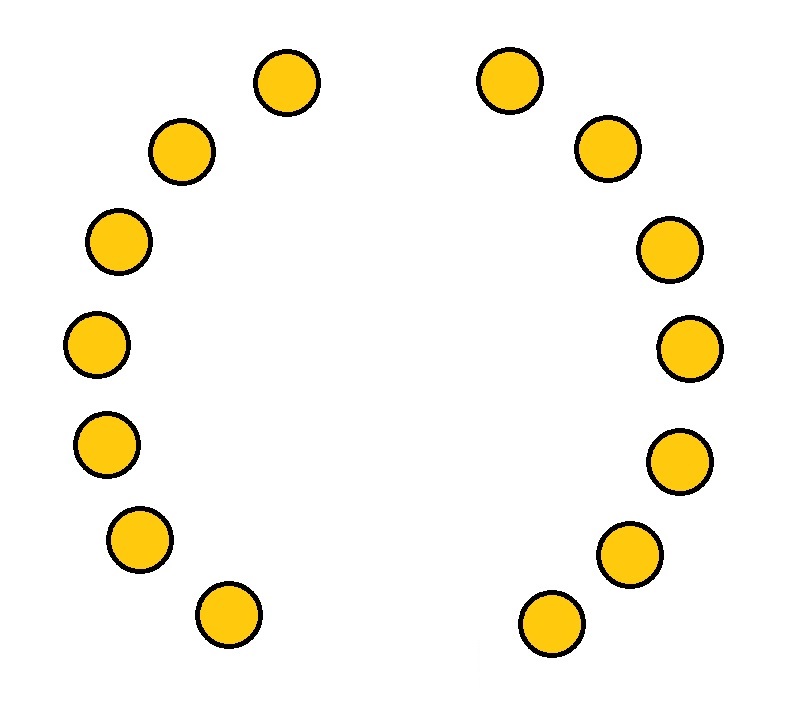

Well, the advantage is with the second person. Here is the strategy:

Case 1: the first person takes one coin

The second person must take the two coins directly opposite the gap, splitting the remaining 16 coins into two arcs of seven coins each.

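From now on, the game is simple: whatever the first person takes from one arc, the second person takes the mirror-image coins from the other arc. The two arcs therefore look identical after every reply, so a mirror move is always available, and the second person is the one who takes the last coin.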
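The mirroring step can be tried out with a small simulation. This is only a sketch under stated assumptions: the function names are hypothetical, the first person plays at random, and each arc is modelled as slots 1 to 7 numbered outward from the first person's opening coin, so that a coin and its mirror image share the same slot number.

```python
import random

def legal_moves(arc):
    """Moves on one arc: a single coin, or two coins in neighbouring slots."""
    singles = [(k,) for k in arc]
    pairs = [(k, k + 1) for k in arc if k + 1 in arc]
    return singles + pairs

def play_mirror_game(seed=0):
    """Play out the game from the two arcs of seven; return who took the last coin."""
    rng = random.Random(seed)
    # Assumed model: both arcs hold coins in slots 1..7 after the opening exchange.
    arcs = [set(range(1, 8)), set(range(1, 8))]
    last_mover = "second"  # the second person has just completed the opening
    while arcs[0] or arcs[1]:
        # First person: any legal move on either non-empty arc.
        side = rng.choice([s for s in (0, 1) if arcs[s]])
        move = rng.choice(legal_moves(arcs[side]))
        arcs[side] -= set(move)
        last_mover = "first"
        # Second person: the same slots on the other arc.
        assert set(move) <= arcs[1 - side], "mirror move should always exist"
        arcs[1 - side] -= set(move)
        last_mover = "second"
    return last_mover

if __name__ == "__main__":
    results = {play_mirror_game(seed) for seed in range(1000)}
    print(results)
```

The assertion inside the loop is the real check: it would fail if the first person ever found a move that could not be copied on the other arc.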

Case 2: the first person takes two coins

The second person must again create two arcs of seven coins each, this time by removing the single coin diametrically opposite the gap, which is the middle coin of the remaining 15. The rest is the same as before.
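Both cases can be cross-checked by exhaustive search. The sketch below is an illustration, not part of the original puzzle: it assumes that a position can be described by the lengths of its unbroken rows of coins, so the circle becomes a single row of 16 coins after a one-coin opening and of 15 coins after a two-coin opening. The names `mover_wins` and `winning_replies` are hypothetical.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def mover_wins(segments):
    """True if the player about to move can force taking the last coin.

    `segments` is a sorted tuple of the lengths of the unbroken rows of coins.
    """
    for i, n in enumerate(segments):
        rest = segments[:i] + segments[i + 1:]
        for take in (1, 2):
            # `left` coins remain on one side of the removed coin(s).
            for left in range(n - take + 1):
                right = n - take - left
                nxt = tuple(sorted(x for x in rest + (left, right) if x > 0))
                if not mover_wins(nxt):
                    return True
    # No coins left, or every move hands the opponent a win.
    return False

def winning_replies(n):
    """Replies on a single row of n coins that leave the opponent losing.

    Each reply is (coins taken, resulting row lengths).
    """
    replies = set()
    for take in (1, 2):
        for left in range(n - take + 1):
            right = n - take - left
            nxt = tuple(sorted(x for x in (left, right) if x > 0))
            if not mover_wins(nxt):
                replies.add((take, nxt))
    return replies

if __name__ == "__main__":
    # Row facing the second person after each kind of opening.
    for opening, row in ((1, 16), (2, 15)):
        print(opening, row, mover_wins((row,)), sorted(winning_replies(row)))
```

In both cases the search reports that the person to move, i.e. the second person, can win, and the split into two rows of seven appears among the winning replies. The search may list other winning replies as well; the mirroring strategy is simply the one that is easiest to carry out at the table.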

Can You Take the Final Coin? A Game Theory Puzzle: William Spaniel