Fallacy of Equivocation

A fallacy is a bad argument mistaken for a good one. A well-known example is the following argument:

1. Computers are products of intelligent design.

2. The human brain is a computer.

Therefore, the human brain is a product of intelligent design.

It is easy to mistake the above argument for an instance of the following valid argument form:

1. All A’s are B’s.

2. x is an A.

Therefore, x is a B.

So what is wrong with the brain-computer argument, when the A-B form is valid? In the A-B form, each letter must stand for the same thing wherever it appears; in particular, the A in the first premise must be the same A as in the second premise. In the brain-computer argument, however, the term “computer” (which plays the role of A) has different meanings in the two premises. In the second premise (“the human brain is a computer”), the word “computer” is used broadly, to mean any system that performs computations.

This is equivocation: using the same word for two different things. In the brain-computer argument, the word “computer” names two things that are only broadly similar. In the first premise (“computers are products of intelligent design”), it means specifically the artificial computers that humans have built. In the second premise, it is used broadly to mean any information-processing system.

Reference

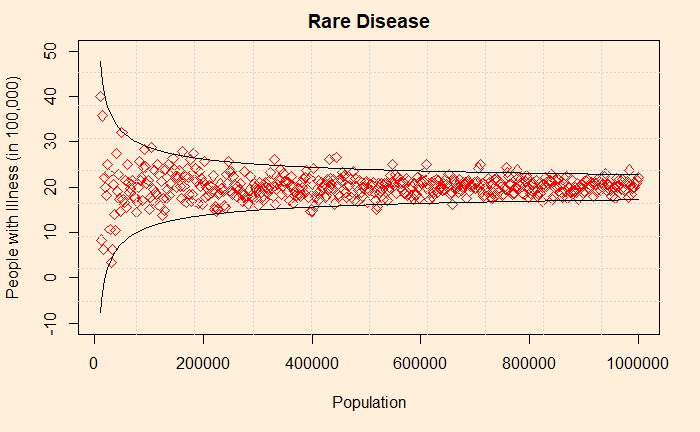

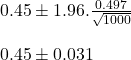

The Small Sample Fallacy: Kevin deLaplante
