Viterbi algorithm

A doctor wants to diagnose whether a patient has a fever or is healthy. The patient can explain the conditions in three options: “Normal,” “Cold,” or “Dizzy.” The doctor has statistical data on health and how patients feel. If a patient comes to the doctor and reports “normal” on the first day, “cold” on the second, and “dizzy” on the third, how is the diagnosis done?

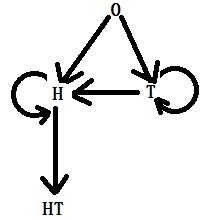

Before we get to the solution, let’s recognise this as a hidden Markov process.

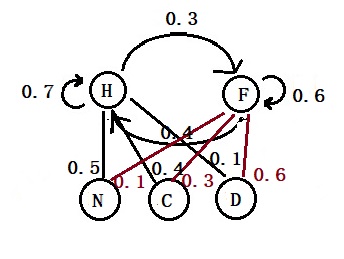

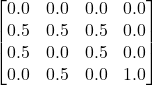

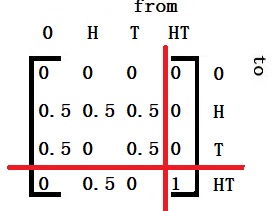

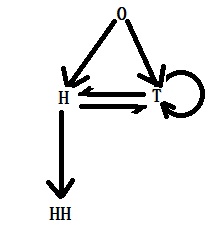

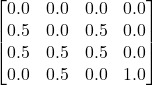

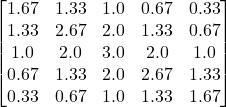

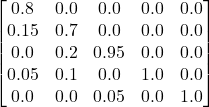

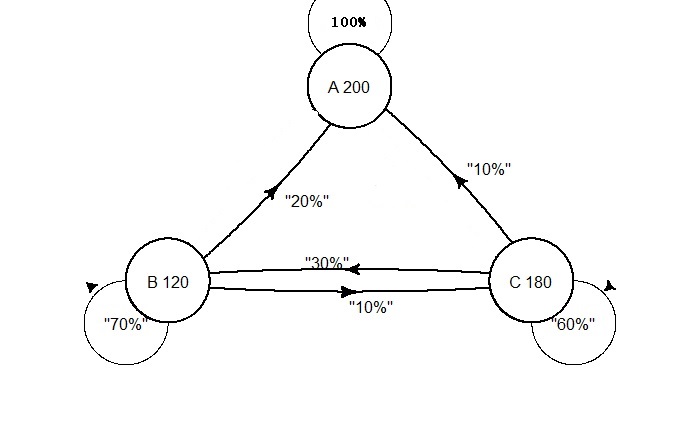

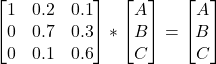

The hidden (latent) variables are “Fever” and “Healthy.” They have translation probabilities.

The observed variables are: “Normal,” “Cold,” and “Dizzy.” They have emission probabilities.

The Viterbi algorithm finds the recursive solution of the most likely state sequence using the maximum a posteriori probability. As the earlier post shows, the Bayes equation may estimate the posterior priority. As the objective is to find the maximum a posteriori, we require only its numerator in Viterbi. In our case,

P(H|N) = P(N|H) x P(H)

H represents “Healthy”, and N denotes “Normal”.

The steps are:

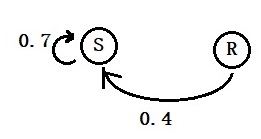

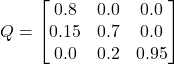

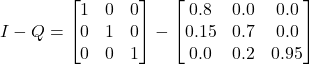

1) Estimate prior probabilities of being healthy (H) or fever (F).

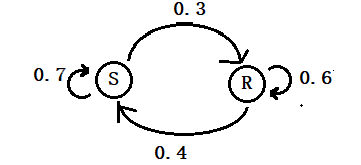

2) Calculate the posterior (the numerator) of day 1 using Bayes.

3) Compare the posteriors of the two posteriors (H vs F) and find out the most likely conditions.

4) Use it as the prior for the next day and repeat steps 2 and 3.

Here is the hidden Markov tree, and we will see the calculations next.