Probability of Circle in a Square

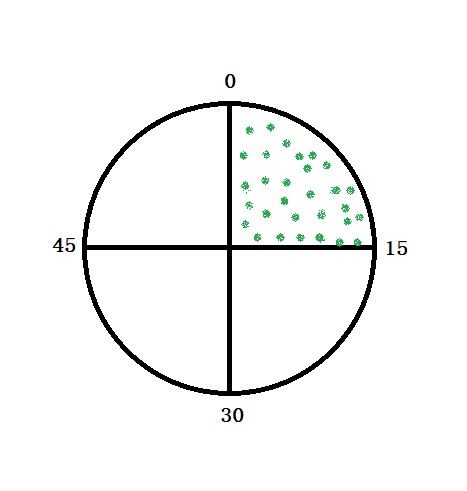

The probability that a point chosen uniformly at random inside a square lands inside the square's inscribed circle is a well-known problem. From the geometry alone, the required probability is $\pi/4$.

If $X$ is the radius of the circle, then the diameter, $2X$, equals the side of the square. The required probability is the area of the circle (green) divided by the area of the square:

$$\frac{\pi X^2}{(2X)(2X)} = \frac{\pi}{4}$$

Equivalently, four times this probability is $\pi$.
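As a quick numeric check of the algebra, the sketch below computes the area ratio for a few arbitrary radii. The helper name `circle_in_square_prob` and the chosen radii are illustrative assumptions; the point is that the radius cancels, so every value should be $\pi/4$.

```r
# Probability that a uniform point in the square falls in the inscribed circle,
# for a circle of radius X (so the side of the square is 2X)
circle_in_square_prob <- function(X) {
  circle_area <- pi * X^2
  square_area <- (2 * X) * (2 * X)
  circle_area / square_area
}

radii <- c(0.5, 1, 3)                       # arbitrary example radii
probs <- sapply(radii, circle_in_square_prob)
print(probs)      # each entry is pi / 4, about 0.785
print(4 * probs)  # four times the probability recovers pi
```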

We will write R code to simulate this case.

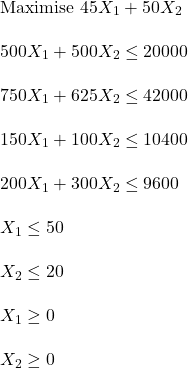

Step 1: Generate uniformly distributed random x- and y-coordinates on the same interval (-1, 1), which fills a 2 x 2 square with points.

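```r
pts <- 10000                         # number of random points
xx <- runif(pts, -1, 1)              # x-coordinates, uniform on (-1, 1)
yy <- runif(pts, -1, 1)              # y-coordinates, uniform on (-1, 1)
plot(xx, yy, asp = 1, col = "blue")  # asp = 1 keeps the square from being stretched
```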
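Because `runif()` draws new numbers on every run, repeating Step 1 will generally produce slightly different points, and therefore a slightly different final estimate. A minimal sketch of making a run repeatable with `set.seed()`; the seed value 2024 is arbitrary and `xx_again` is an illustrative name.

```r
set.seed(2024)           # arbitrary seed, chosen only to make runs repeatable
pts <- 10000
xx <- runif(pts, -1, 1)  # x-coordinates, uniform on (-1, 1)
yy <- runif(pts, -1, 1)  # y-coordinates, uniform on (-1, 1)

# Resetting the same seed reproduces exactly the same draws
set.seed(2024)
xx_again <- runif(pts, -1, 1)
print(identical(xx, xx_again))
```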

Step 2: Select only the points that satisfy the condition for lying inside the circle, $X^2 + Y^2 < 1$, where 1 is the radius of the circle that fits inside the square.

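```r
xx1 <- numeric(pts)  # x-coordinates of points inside the circle, 0 otherwise
yy1 <- yy            # start from all y-coordinates, then zero out the outside ones

for (i in 1:pts) {
  # x^2 < 1 - y^2 is the same test as x^2 + y^2 < 1
  if (xx[i]^2 < 1 - yy[i]^2) {
    xx1[i] <- xx[i]
  } else {
    xx1[i] <- 0
  }
}

# Points outside the circle also get y = 0, so they collapse onto the origin
yy1[which(xx1 == 0)] <- 0

plot(xx1, yy1, asp = 1, type = "p", col = "red")
```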
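R's vectorized comparisons can do the same selection without a loop or zero placeholders. This is a sketch of an alternative, not the author's code; the seed and the names `inside`, `x_in` and `y_in` are illustrative. One difference worth noting: the placeholder approach moves every outside point to (0, 0), whereas logical indexing simply drops it.

```r
set.seed(1)  # arbitrary seed so the example is repeatable
pts <- 10000
xx <- runif(pts, -1, 1)
yy <- runif(pts, -1, 1)

# Logical vector: TRUE where the point satisfies x^2 + y^2 < 1
inside <- xx^2 + yy^2 < 1

# Keep only the points inside the circle
x_in <- xx[inside]
y_in <- yy[inside]

plot(x_in, y_in, asp = 1, type = "p", col = "red")
```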

Combining the two plots overlays the red circle of points on the blue square of points.
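A minimal sketch of drawing the combined figure in one go: plot all points in blue with `plot()`, then add the points inside the circle in red with `points()`. It regenerates the data so it runs on its own; the seed and the name `inside` are illustrative assumptions.

```r
set.seed(1)  # arbitrary seed so the example is repeatable
pts <- 10000
xx <- runif(pts, -1, 1)
yy <- runif(pts, -1, 1)
inside <- xx^2 + yy^2 < 1  # TRUE for points inside the unit circle

# Draw every point in blue, then overlay the points inside the circle in red
plot(xx, yy, asp = 1, col = "blue")
points(xx[inside], yy[inside], col = "red")
```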

Four times the ratio of the number of points inside the circle to the number of points in the square is:

```r
4 * length(xx1[abs(xx1) > 0]) / length(xx)
```

This gives 3.1456.
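How close should the estimate be? The result is 4 times a sample proportion whose true value is $p = \pi/4$, so its standard error is $4\sqrt{p(1-p)/n} \approx 1.64/\sqrt{n}$. For $n = 10{,}000$ that is about 0.016, so a value of 3.1456 (about 0.004 away from $\pi$) is well within normal run-to-run variation, and 100,000 points shrink the error by a factor of $\sqrt{10}$, to about 0.005. The sketch below uses hypothetical helper names `estimate_pi` and `pi_se`.

```r
# Monte Carlo estimate of pi from n uniform points in the 2 x 2 square
estimate_pi <- function(n) {
  x <- runif(n, -1, 1)
  y <- runif(n, -1, 1)
  4 * mean(x^2 + y^2 < 1)  # mean of a logical vector = fraction of points inside
}

# Standard error of the estimate: 4 * sqrt(p * (1 - p) / n) with p = pi / 4
pi_se <- function(n) {
  p <- pi / 4
  4 * sqrt(p * (1 - p) / n)
}

set.seed(1)  # arbitrary seed so the example is repeatable
for (n in c(1e3, 1e4, 1e5)) {
  cat(sprintf("n = %6d  estimate = %.4f  standard error = %.4f\n",
              as.integer(n), estimate_pi(n), pi_se(n)))
}
```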

Increase the number of points to 100,000, and you get a much cleaner picture:
