SSP in CMIP6

A key feature of the climate report is the abundance of acronyms, and the title for today’s post is a deliberate attempt to introduce a couple of them!

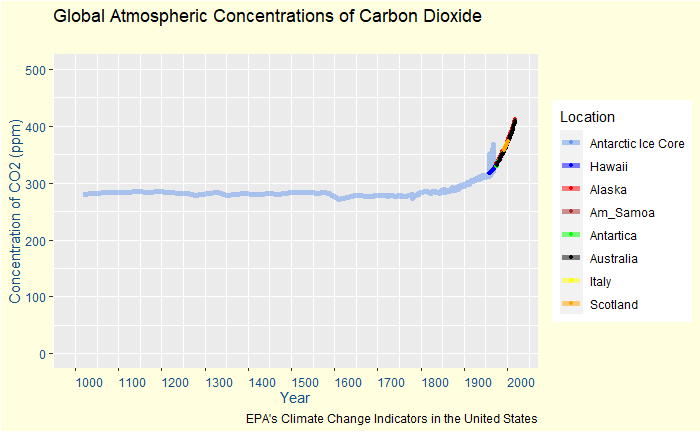

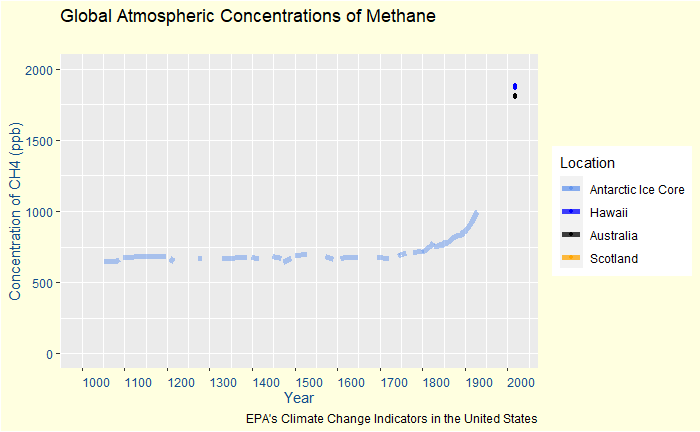

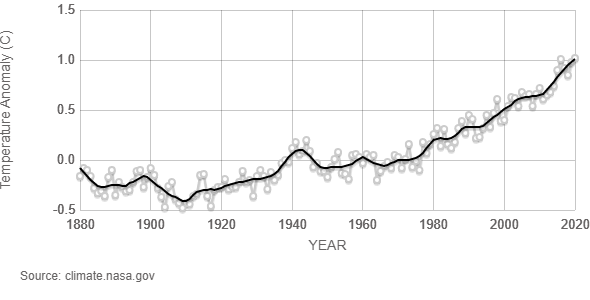

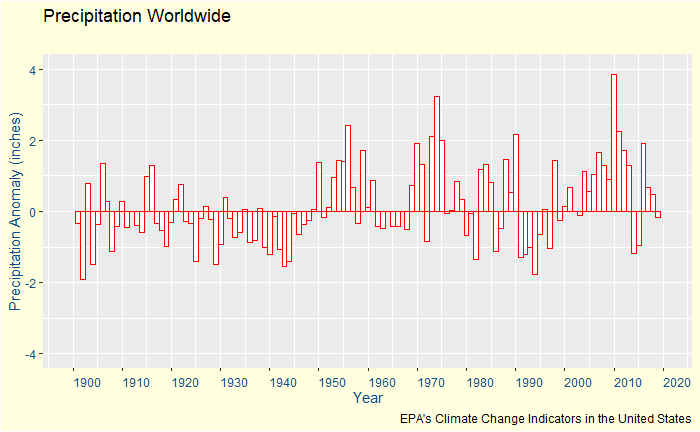

One of the goals of establishing a modelling framework is to forecast, and the prerequisite for reliable forecasting is a good fit to historical data. In the previous post, we saw the importance of CMIP and the role of climate models in matching historical trends as closely as possible.

SSPs

Projections of future climate in CMIP6 are based on five pathways, called the Shared Socioeconomic Pathways (SSPs), SSP1 through SSP5. They range from the mildest in terms of climate impact, SSP1 (sustainable development), to the harshest, SSP5 (high energy demand and fossil-fuelled development). Each pathway is then combined with a level of global effective radiative forcing (ERF), in W/m², envisaged for 2100, and these combinations make up the SSP matrix.

Representation of SSP scenarios

An SSP scenario is labelled by its pathway number followed by its 2100 forcing value. For example, the sustainable pathway (SSP1) at 1.9 W/m² ERF is SSP1-1.9. Chapter 4 of the AR6 Working Group I (WG1) report focusses on five scenarios: SSP1-1.9, SSP1-2.6, SSP2-4.5, SSP3-7.0 and SSP5-8.5.
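Because the labels follow a fixed pattern, they can be split mechanically into pathway and forcing, and the five scenarios laid out as a small pathway-by-forcing grid. The sketch below assumes every label looks like `SSPx-y.y` (one digit for the pathway, one decimal place for the forcing). `Scenario`, `parse_scenario` and `ssp_matrix` are hypothetical helpers written for illustration. They are not part of any CMIP or IPCC tool.

```python
import re
from dataclasses import dataclass

# Hypothetical label format: "SSP" + pathway digit (1-5) + "-" + forcing
# in W/m^2 with one decimal place, e.g. "SSP1-1.9".
_LABEL = re.compile(r"^SSP([1-5])-(\d+\.\d)$")


@dataclass(frozen=True)
class Scenario:
    pathway: int    # SSP narrative number, 1 to 5
    forcing: float  # nominal radiative forcing in 2100, W/m^2


def parse_scenario(label: str) -> Scenario:
    """Split an SSPx-y.y label into its pathway number and forcing level."""
    match = _LABEL.match(label)
    if match is None:
        raise ValueError(f"not an SSP scenario label: {label!r}")
    return Scenario(pathway=int(match.group(1)), forcing=float(match.group(2)))


def ssp_matrix(labels: list[str]) -> str:
    """Lay scenarios out as pathway rows x forcing columns ('X' = scenario exists)."""
    scenarios = [parse_scenario(label) for label in labels]
    forcings = sorted({s.forcing for s in scenarios})
    pathways = sorted({s.pathway for s in scenarios})
    present = {(s.pathway, s.forcing) for s in scenarios}
    rows = ["       " + "".join(f"{f:>6}" for f in forcings)]
    for p in pathways:
        cells = "".join(f"{'X' if (p, f) in present else '.':>6}" for f in forcings)
        rows.append(f"SSP{p}   {cells}")
    return "\n".join(rows)


# The five scenarios assessed in AR6 WG1 Chapter 4.
CORE = ["SSP1-1.9", "SSP1-2.6", "SSP2-4.5", "SSP3-7.0", "SSP5-8.5"]

if __name__ == "__main__":
    print(parse_scenario("SSP1-1.9"))
    print(ssp_matrix(CORE))
```

Most cells in the printed grid are empty. The core scenarios are a selected subset of pathway and forcing combinations, not every possible pairing.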

According to these scenarios, a 1.5°C increase in global mean surface air temperature (GSAT) relative to 1850–1900 is very likely to occur in SSP5-8.5, likely to occur in SSP2-4.5 and SSP3-7.0, and more likely than not to occur in SSP1-1.9 and SSP1-2.6 within the next 20 years!
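The likelihood words in this statement are IPCC calibrated terms, and each one stands for a probability range. For example, "likely" means 66–100% and "very likely" means 90–100%. The sketch below is illustrative only. It encodes those standard ranges and attaches them to each scenario's near-term 1.5°C assessment. `LIKELIHOOD`, `NEAR_TERM_1P5C` and `probability_range` are hypothetical names.

```python
# IPCC calibrated likelihood terms mapped to (lower, upper) probability bounds.
LIKELIHOOD = {
    "virtually certain": (0.99, 1.00),
    "extremely likely": (0.95, 1.00),
    "very likely": (0.90, 1.00),
    "likely": (0.66, 1.00),
    "more likely than not": (0.50, 1.00),  # strictly greater than 50%
    "about as likely as not": (0.33, 0.66),
    "unlikely": (0.00, 0.33),
    "very unlikely": (0.00, 0.10),
    "extremely unlikely": (0.00, 0.05),
    "exceptionally unlikely": (0.00, 0.01),
}

# Assessed likelihood of reaching 1.5 C of GSAT warming in the near term,
# for each of the five core scenarios.
NEAR_TERM_1P5C = {
    "SSP1-1.9": "more likely than not",
    "SSP1-2.6": "more likely than not",
    "SSP2-4.5": "likely",
    "SSP3-7.0": "likely",
    "SSP5-8.5": "very likely",
}


def probability_range(scenario: str) -> tuple[float, float]:
    """Return the probability bounds implied by a scenario's likelihood term."""
    return LIKELIHOOD[NEAR_TERM_1P5C[scenario]]


if __name__ == "__main__":
    for name, term in NEAR_TERM_1P5C.items():
        low, high = probability_range(name)
        print(f"{name}: {term:<22} {low:.0%}-{high:.0%}")
```

Every lower bound is at least 50%. So reaching 1.5°C is judged more probable than not under all five scenarios, and the scenarios differ only in how confidently this can be stated.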
