Last time, we looked at the expected waiting times of sequences in coin-flipping games. Today, we will build the formulation needed to explain those observations.

We have already seen how expected values are calculated. In statistics, the expected value of a quantity is its long-run average, obtained by summing each possible value multiplied by its theoretical probability of occurrence. We start with the simplest case in the coin game.

Consider a coin with probability p for heads and q (= 1 - p) for tails. Note that, for a fair coin, p = 1/2.

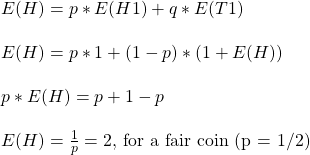

Expected waiting time for H

You toss a coin once: it lands on H with probability p and on T with probability q, or (1 - p). If it is H, the game ends after one flip. The waiting time is 1, so this case contributes p x 1 to the expected value, E(H). On the other hand, if the flip ends up as T, you have to start again. In other words, you have used 1 flip and are back to needing E(H) more. This case contributes (1 - p) x (1 + E(H)). The final E(H) is the sum of the two cases: E(H) = p x 1 + (1 - p) x (1 + E(H)), which solves to E(H) = 1/p.

This should not come as a surprise. Since p is the probability of getting heads (say, 1/2), over many flips you get an H once every 1/p flips on average (every 2 flips for a fair coin).

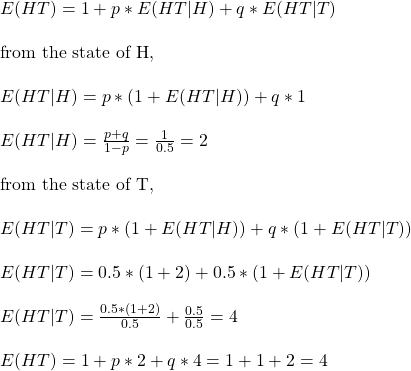
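The result for H can be checked numerically. The following is a minimal sketch, not part of the original derivation: the function names `expected_wait_h` and `simulate_wait_h`, the number of trials, and the seed are all illustrative choices. It compares the closed form 1/p with a simple simulation that flips until the first head.

```python
import random
from fractions import Fraction


def expected_wait_h(p):
    """Closed form from E(H) = p*1 + (1 - p)*(1 + E(H)), i.e. E(H) = 1/p."""
    return 1 / p


def simulate_wait_h(p, trials=100_000, seed=0):
    """Estimate the average number of flips until the first H (illustrative helper)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total = 0
    for _ in range(trials):
        flips = 1
        # A flip is tails with probability 1 - p; keep flipping until heads.
        while rng.random() >= p:
            flips += 1
        total += flips
    return total / trials


if __name__ == "__main__":
    print(expected_wait_h(Fraction(1, 2)))  # exact value for a fair coin
    print(simulate_wait_h(0.5))             # simulated estimate, close to the exact value
```

For a fair coin the closed form gives exactly 2, and the simulated average should land close to it; the gap shrinks as `trials` grows.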

Expected waiting times for HT

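We follow the same logic again. The first flip costs 1, and it leaves you in one of two states: H with probability p, or T with probability q. So E(HT) = 1 + p x E(HT|H) + q x E(HT|T). Here, E(HT|H) is the expected number of further flips needed to complete HT given that the last flip was H, and E(HT|T) is the same quantity given that the last flip was T. From the second flip onwards, you continue either from the state H or from the state T.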

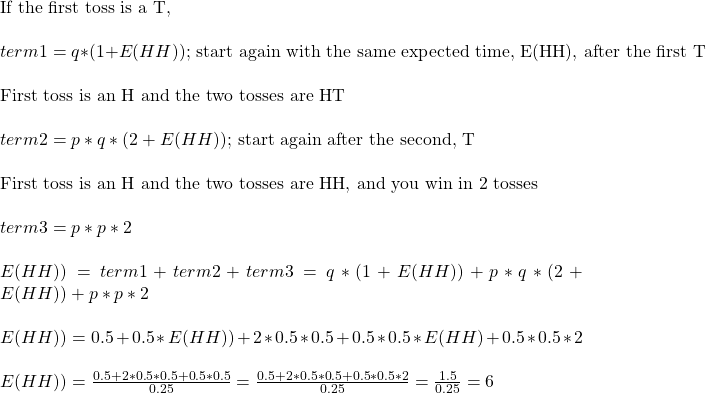
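The two-state argument for HT can be sketched in code as well. This is an illustrative sketch under stated assumptions: it takes E(HT|T) to equal E(HT), since a T gives no progress towards HT, and it takes E(HT|H) = 1 + p x E(HT|H), since from H a T finishes the game and another H keeps you in the same state. The function names `expected_wait_ht` and `simulate_wait_ht` are hypothetical, chosen for this example.

```python
import random
from fractions import Fraction


def expected_wait_ht(p):
    """Solve the state equations for HT (assumes 0 < p < 1)."""
    q = 1 - p
    # State H: E(HT|H) = 1 + p*E(HT|H) + q*0, so E(HT|H) = 1/q.
    e_after_h = 1 / q
    # State T is the same as starting over: E(HT|T) = E(HT).
    # E(HT) = 1 + p*E(HT|H) + q*E(HT)  ->  E(HT) = (1 + p*E(HT|H)) / p.
    return (1 + p * e_after_h) / p


def simulate_wait_ht(p, trials=100_000, seed=0):
    """Estimate the average number of flips until HT first appears."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total = 0
    for _ in range(trials):
        flips = 0
        prev_heads = False
        while True:
            heads = rng.random() < p
            flips += 1
            if prev_heads and not heads:  # previous flip H, current flip T
                break
            prev_heads = heads
        total += flips
    return total / trials


if __name__ == "__main__":
    print(expected_wait_ht(Fraction(1, 2)))  # exact value for a fair coin
    print(simulate_wait_ht(0.5))             # simulated estimate
```

For a fair coin the state equations give exactly 4, and in general they simplify to 1/(p x q). The simulation is only a sanity check on that algebra.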

Expected waiting times for HH

We’ll use a different method here.